What is clock jitter in detail?

Comments

Yep. Despite Wikipedia's notorious reputation for inaccuracies, that article pretty much delineates anything the novice would ever want to know (and a lot more) about clock jitter.

Thankfully, AD/DA has come a long, long way since the days when jitter was a serious enough issue that everyone was using outboard clocks.

The Wiki article is detailed but general. In the field of recording, all one usually needs to know is that clock jitter is the variation in time of a sampling instant about the ideal time, and applies to signal conversion between analog and digital domains in both directions, i.e. both A-D and D-A conversion. Since the analog waveform is constantly changing, a variation in time translates to a variation in amplitude, and hence an error in the converted signal. This will show as non-harmonic distortion.

Scott Griffin, post: 346150 wrote: Yep. Despite Wikipedia's notorious reputation for inaccuracies,...

I think that (well deserved) reputation comes from pop culture, history, politics, and the "soft" sciences. I find Wiki to be as reliable as any other general reference source that I know of in math and classical physics. Compare it to a modern Encyclopedia Britannica. Wiki is also more comprehensive and the price is right. As always, check it twice. In the words of Ronald Reagan, "Trust, but verify." (Which means, "Don't trust.")

BobRogers, post: 346251 wrote: I think that (well deserved) reputation comes from pop culture, history, politics, and the "soft" sciences. I find Wiki to be as reliable as any other general reference source that I know of in math and classical physics. Compare it to a modern Encyclopedia Britannica. Wiki is also more comprehensive and the price is right. As always, check it twice. In the words of Ronald Reagan, "Trust, but verify." (Which means, "Don't trust.")

As someone who moonlights in the medical field, I can assure you, Wiki blows it in that department regularly.

I certainly agree with Boswell's comments but I do have something to add. In just about all spec sheets for audio interfaces, jitter is quoted as the error between consecutive clock edges rather than the more key aspect, as Boswell implies, of errors in absolute time. Nor have I seen any figures given for clock jitter when externally referenced. Phase noise (particularly in PLLs) when clocks are slaved can be very poor and often does not follow the WIKI suggestion of Gaussian noise. This is one of the areas where I certainly think improvements can be made, and I think that will only happen if we can get better specification of jitter from the manufacturers. Indeed, what is mostly quoted as jitter is, to my mind at least, not a true measure of jitter.

OK this is the tech talk forum, so my question is how far do we want to go into this topic?

Scott Griffin, post: 346309 wrote: This forum could use a topic like the legendary 96K argument on George Massenburg's old (now defunct) forum on Musicplayer....

Go as deep as you like, and offer as provocative an opinion as you dare! *grin*

Maybe the moderators should decide that! :

Take all the fun out of it, then. :P

In all seriousness, I'd be intrigued to hear your dissertation and, more specifically, how you believe it affects us these days, post the rise of multi-bit error correction, inexpensive-to-produce mag shielding, oversampling, and other formerly renegade ideas that have become industry standard cures for the jitter bug (pardon the pun).

What sparked this thread was reading this the other day: I'm guessing, nothing has changed? Excerpt from: What is clock jitter? http://stason.org/T…

Clock jitter is a colloquialism for what engineers would readily call time-domain distortion. Clock jitter does not actually change the physical content of the information being transmitted, only the time at which it is delivered. Depending on circumstance, this may or may not affect the ultimate decoded output

As someone who experiences dropouts and other irregularities often enough to cause concern, I beg the both of you to go on.

I am a novice compared to many here, but a quick study.

My current setup is somewhat reliable - not good enough.

While this may or may not affect my problem or solution (I suspect a faulty unit), I still jump at the chance to learn something about my craft.

So go on, Scott, and Mr. Ease, please!

Oh Boy, what have I let myself in for!

First of all, due to the technical nature of this I think it is best from the point of view of my available time and helping understanding if I do this in bite size chunks.

Let me be clear from the outset that I am viewing this purely from the engineering aspect but will do my best to present things in relatively layman's terms. Not an easy task I assure you.

OK so the first thing to bear in mind is why "jitter" is not (normally!) desirable. In the audio sampling world, it has already been stated (via WIKI and other links here) that variation in the absolute (perfect) timing will cause small errors in the actual sampled signal. This is simply because the analog signal is continuously varying and if we change the time of the sample, the sampled value will also change. As far as the DAW is concerned, it HAS to assume that all samples were taken in perfect time (it has no other reference) so we can directly infer that any timing errors caused by jitter will cause some form of distortion to the sampled waveform.

Now the explanation on the link provided by audiokid says that how fast the clock edges are (the edges being where we sample) causes the jitter. That is not strictly correct, as it is actually noise that causes the jitter. That page, at least, makes no mention of the key factor, which is called "phase noise". Phase noise is present in all clock signals and is directly linked to jitter.

Various references correctly point out that (I believe) all interfaces use a crystal controlled clock oscillator. While these offer by far the lowest phase noise at reasonable cost, the phase noise of crystal oscillators will also vary depending on the particular crystal and oscillator circuit. However it is not very difficult to produce a good design. What is far more critical though is to ensure that the oscillator is not degraded by external effects such as power supply noise and various local digital signals. This is an important point as, while the inherent noise of the oscillator should be Gaussian as mentioned in the WIKI article, external noise sources could cause jitter that is not only not Gaussian but also contains discrete signals, say from a local digital signal. This could certainly cause sampling errors with discrete signal distortion, which is most likely going to be much more noticeable.
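To put a rough number on "a timing error becomes an amplitude error", here is a minimal Python sketch. It is only an illustration, not anything taken from a converter data sheet: the 20 kHz tone, the amplitude of 1.0 and the 1 ns timing error are assumed values, and the function names are made up for this example. It uses the fact that a sine of amplitude A and frequency f is steepest at its zero crossing, where the slope is 2·π·f·A.

```python
import math

def worst_case_sample_error(freq_hz, amplitude, timing_error_s):
    """Upper bound on the amplitude error when a sine is sampled late or early.

    The steepest slope of A*sin(2*pi*f*t) is 2*pi*f*A (at a zero crossing),
    so a timing error dt can shift the sampled value by at most slope * dt.
    """
    return 2 * math.pi * freq_hz * amplitude * timing_error_s

def error_at_zero_crossing(freq_hz, amplitude, timing_error_s):
    """Actual error when the sample meant for t = 0 is taken at t = dt."""
    ideal = amplitude * math.sin(0.0)
    jittered = amplitude * math.sin(2 * math.pi * freq_hz * timing_error_s)
    return abs(jittered - ideal)

# Assumed, illustrative values: 20 kHz tone, amplitude 1.0, 1 ns timing error.
bound = worst_case_sample_error(20_000, 1.0, 1e-9)
actual = error_at_zero_crossing(20_000, 1.0, 1e-9)
print(f"bound: {bound:.4e}  actual at zero crossing: {actual:.4e}")
```

The error scales linearly with both the signal frequency and the timing error, so high frequency, high level content is where jitter does the most damage.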

I think that's enough for the first bite of the apple!

P.S. I am writing this stuff "on the fly" so I may well make some silly errors. Hopefully Boswell will be able to spot these and help me out!

MrEase, post: 346332 wrote: Maybe the moderators should decide that! :

By all means go on discussing the topic of clock jitter - such discussions are a big reason for having this forum. It would be helpful to keep the contributions grounded in fact rather than in opinion or hearsay, but different people's differing views are what makes for a good discussion.

soapfloats, post: 346379 wrote: As someone who experiences dropouts and other irregularities often enough to cause concern, I beg the both of you to go on.

I am a novice compared to many here, but a quick study.

My current setup is somewhat reliable - not good enough.

While this may or may not affect my problem or solution (I suspect a faulty unit), I still jump at the chance to learn something about my craft.

Clock jitter normally causes problems below the level of dropouts or bit errors, but if severe, can lead to those as well. It's an inexactitude in time that is usually small enough not to exceed bit error limits, but to cause quantisation errors due to the temporal displacement of the sampling instant.

First of all I would echo what Boswell said regarding Soapfloats' dropouts. One question arises though: are you (Soapfloats) using interfaces sync'd to a master or other clocks? If so, the jitter will increase significantly, but it still should not be enough to cause dropout problems.

OK, that said, now for a shorter byte. If we now assume we have a good, low phase noise (i.e. low jitter) oscillator, it will be running at a very much higher frequency than the sample rate. The actual frequency will normally be the LCM (least common multiple) of all the sample frequencies the interface allows, although it may, for various reasons, be higher. This means the frequency will be divided down to suit the selected sample frequency. Dividing a high frequency also lowers the phase noise: the bigger the divide ratio, the lower the phase noise. This follows a simple formula, but there is a limit on the phase noise floor at the output, which depends on the logic family of the divider. Logic families such as ECL (probably never used in this application) are the worst, followed by LSTTL, TTL, CMOS etc. If you are not familiar with these terms, it doesn't matter, I'm just making the point about different options for the designer. Normally the dividers in our interfaces will be either CMOS or perhaps be implemented in what is called an FPGA. An FPGA is simply a programmable logic chip. These can implement all sorts of logic functions including dividers. With FPGA designs care must be taken to ensure that any dividers are as free from jitter as possible but in general they perform well.
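For anyone who wants to play with the numbers, the usual textbook relationship for an ideal divide-by-N is that phase noise drops by 20·log10(N) dB, until it runs into the noise floor of the divider logic. The Python sketch below assumes that relationship. The oscillator figure, the divider floor and the 24.576 MHz to 48 kHz example are assumed, illustrative values and not measurements of any particular interface. Clamping to the floor with `max` is also a simplification, since in reality the two noise contributions add.

```python
import math

def divider_phase_noise_change_db(divide_ratio):
    """Change in phase noise (dB) through an ideal divide-by-N: -20*log10(N)."""
    return -20 * math.log10(divide_ratio)

def divided_phase_noise_dbc_hz(osc_dbc_hz, divide_ratio, floor_dbc_hz):
    """Phase noise after the divider, limited by the divider's own noise floor.

    Simplification: the result is clamped to the floor rather than
    power-summing the two noise contributions.
    """
    ideal = osc_dbc_hz + divider_phase_noise_change_db(divide_ratio)
    return max(ideal, floor_dbc_hz)

# Assumed example: 24.576 MHz master clock divided by 512 gives 48 kHz.
ratio = 24_576_000 // 48_000
print(ratio)                                           # divide ratio
print(round(divider_phase_noise_change_db(ratio), 2))  # dB change through the divider
# Hypothetical oscillator at -130 dBc/Hz, hypothetical divider floor at -160 dBc/Hz.
print(divided_phase_noise_dbc_hz(-130.0, ratio, -160.0))
```

The dB improvement does not mean the edges land any closer to their ideal instants: the same time error is simply a smaller fraction of the longer output period.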

So now we should have a good low jitter clock source for our interface and in general, this is the case.

Next up is how we measure jitter and later, what happens when we start trying to slave various interfaces to a clock reference....this gets a lot more tricky!

P.S. I missed an important point! The jitter - in terms of absolute time - at the output of a divider will in essence be the same as the jitter from the oscillator, again in absolute time, with perhaps a tiny degradation due to the noise of the divider itself. Note that this is not the same as the cycle to cycle jitter spec's often provided by manufacturers.

To Mr. Ease:

My setup is as follows: Presonus Firestudio, and two Digimax LTs as ADAT 1 & 2.

There are periodic, but consistent issues w/ clocking (FS as master, Digi won't sync sometimes; Digi as master, FS will sync, but dropouts occur. Sometimes total stoppage of tracking).

I've tried changing which unit is master, how it's clocked (FS v. ADAT v. BNC) and changing sample rates. These issues occur at random regardless.

This is why I have my finger on the trigger for an RME FF800.

Again, jitter probably isn't my issue. But, I saw the opportunity to spur a discussion I could learn from, if not solve my issues.

Or, I smelled a good ol' good one in the works, and thought I'd provide the spark.

Please keep it coming w/ your bites - a little of it is over my head, but I'm following!

To Soapfloats,

As all your gear is from Presonus, I am surprised that there is an issue. The most curious thing is that the Digi's sometimes won't sync to the Firestudio. Unfortunately though, the problems you see do not seem to be related to jitter, and I would suspect the Firestudio drivers. This does not explain why the Digi's sometimes fail to sync, unless the Firestudio clock is not consistent. I have no experience with Presonus gear but I have heard of quite a few people with driver trouble. This is primarily from the Cakewalk Sonar forums, where several people have given up and got rid of such problems by changing their interface! Apparently this is something to do with the DiceII chipsets. A common piece of advice with Firewire is to use TI chipset ports on your PC or Mac.

If I were you I'd be trying to get some support from Presonus but I have no idea how good their support is....

OK, so how do "we" measure jitter, or rather, how do I measure jitter!

I have already mentioned that all the spec's on this I have seen give a figure for cycle to cycle variations in timing. To me, as an engineer, this means virtually nothing as it gives almost none of the information that is crucial to audio sampling. You would think that a lower figure for jitter should represent a better product, but that is not necessarily the case. If we knew absolutely that the phase noise had a Gaussian distribution then it would, but as I have already said, that is certainly not an assumption we can make.

In answering Soapfloats in my last post here I looked up some spec's and noticed this:

Jitter

Jitter Attenuation >60dB, 1ns in=>1ps out

This seems to be a more detailed spec. than normally given but still the first line tells me very little. This is an RMS figure, so clearly the peak errors will be greater, it also mentions 20Hz - 20kHz. This gives me a very good idea of how this was measured but still tells me very little about real world performance. Are there any discrete signals in that 20 - 20k bandwidth, is it white noise, pink noise or what? The answer to this will give me some idea but the bottom line is I simply would not measure jitter in this way.

The second line gives us information about the performance when locked to an external source although I would defy the layman to know that. Having said that, the >60dB does tell me something about the PLL performance but is that a good or indeed necessary requirement? First of all, I do not believe for one second that using an external source could provide the apparently improved jitter performance of =>1ns over the internal clock spec of

As I have already implied (I think!), if you are using a single interface and not slaving any other soundcards, jitter should really not be an issue, as a single clock determines the timing of all channels of the interface. Unless the design is poor in respect of allowing PSU or digital noise degradation, this will be the case. While it is important that the clock is good enough to all but eliminate distortion on a single channel, it is also important that all channels in an interface sample at the same time. It is not really sufficient to say that differences in distance between microphones already skew the timing of signals. From the engineering viewpoint this is a different problem, as it would not cause distortion in the sampling process itself.

The biggest problems with jitter arise when we slave one interface to another. Now using the real spec given above you might think that we should always get two samples within 20ps of each other - if that were the case we really would not have any problem or debate about clock jitter. What the spec does not tell us is how many consecutive periods we might get of minus 20ps (for example), and that is important as it would tell us what the maximum timing error between supposedly identically timed samples might be.

Testing for this figure is not quite so straightforward as cycle to cycle testing but does reveal an awful lot more about what our real timing errors are going to be. The problem with absolute testing is that we have no perfect standard so the best technique, which actually shows real world results, is to measure one clock against another in time. If we have a reference clock which is the best available and then use it to reference two identical interfaces we can then measure the maximum difference between the two interfaces. This is a real result and we can also analyse the characteristic of this noise to identify any problems. Of course the performance of a single slaved interface will have half of this measured error (with two identical interfaces) as we are measuring the sum of two errors. I have used this technique for over thirty years and can also say that, in the satellite comm's world, this is how jitter is really measured and specified, as cycle to cycle measurements just do not convey enough information.
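As a rough sketch of the bookkeeping behind this kind of clock-against-clock measurement (in Python, with made-up numbers): if you have the arrival times of corresponding edges from two devices, the mean offset is the fixed, systematic delay, and the peak to peak spread of the offsets is the relative jitter. The function name and the edge times below are hypothetical and only show the arithmetic. They are not measured data.

```python
def relative_timing_stats(edges_a_s, edges_b_s):
    """Compare corresponding clock edges from two devices.

    Returns (mean_offset, peak_to_peak) of (edge_b - edge_a), in the same
    units as the inputs. The mean is the systematic delay between the two
    clocks; the peak to peak spread is the relative jitter.
    """
    offsets = [b - a for a, b in zip(edges_a_s, edges_b_s)]
    mean_offset = sum(offsets) / len(offsets)
    return mean_offset, max(offsets) - min(offsets)

# Hypothetical edge times in seconds: device B trails device A by roughly 250 ns.
edges_a = [0.0, 20.833e-6, 41.667e-6, 62.500e-6]
edges_b = [t + d for t, d in zip(edges_a, [250e-9, 262e-9, 240e-9, 255e-9])]

mean_offset, p2p = relative_timing_stats(edges_a, edges_b)
print(f"systematic delay ~ {mean_offset * 1e9:.1f} ns, relative jitter ~ {p2p * 1e9:.1f} ns p-p")
```

A scope with persistence does the same thing visually: triggering on one clock and letting the trace of the other build up shows the fixed delay as the position of the edge and the relative jitter as its width.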

I'm not sure if any of this is going to come over clearly. If not, please let me know and I'll try to clarify it all.

Hi Folks,

As putting these thoughts on jitter is somewhat time consuming, could I ask for a bit of feedback on whether my ramblings are of interest?

So far the only response is from soapfloats and writing stuff like this on a forum is a bit like shouting into a black hole - you have no idea if anyone is interested. Also there don't seem to have been that many hits on the thread (since I started my ramblings) so currently I just don't know if this is worthwhile continuing. Of course I'll continue if you are interested and don't find it too boring!

To paraphrase a well known saying, in cyberspace, no one can hear you yawn!

Yes, it's fine, and it's unlikely that people would want to take issue with what you have said.

The relationship between jitter and uncertainty of sampling instant used to be clear cut in the early digital audio days of non-oversampled converters. These days, the actual clock into the A-D or D-A converter is of several MHz, phase-locked to the sampling clock. The phase-locking process can smooth out jitter in the sampling clock, but at the same time brings its own jitter problems, so without examining in detail the design of a particular piece of gear, it's difficult to say what is actually the dominant factor in the jitter specifications.

Thanks all for the response. I will continue asap but I'm a bit pushed for time over the next few days!

Boswell, post: 346860 wrote: Yes, it's fine, and it's unlikely that people would want to take issue with what you have said.

The relationship between jitter and uncertainty of sampling instant used to be clear cut in the early digital audio days of non-oversampled converters. These days, the actual clock into the A-D or D-A converter is of several MHz, phase-locked to the sampling clock. The phase-locking process can smooth out jitter in the sampling clock, but at the same time brings its own jitter problems, so without examining in detail the design of a particular piece of gear, it's difficult to say what is actually the dominant factor in the jitter specifications.

I couldn't agree more. This is part of why I find the way jitter is currently specified to be next to useless. View it as my personal crusade to produce meaningful spec's!

Keep it going in your own time.

Most of my contemporaries on this thread are a great deal more well-versed than myself, but I know we all share a commitment to a better understanding of every aspect of our business. Regardless of perceived relevance, we should all at least be aware of such things and be able to discuss them.

Thanks for putting in the effort.

Well, so far I have tried to cover aspects of jitter related mainly to using a single soundcard and why I regard the way jitter is currently specified to be almost useless.

If that raises any questions please let me know and I'll try to tackle them.

In general, with a simple set up, with a single soundcard, jitter should never really be a problem and if it was I could only recommend ditching the particular soundcard.

Things get more complex when slaving several soundcards together, or even using a set up such as soapfloats', who uses a single soundcard with two input expanders synchronised via ADAT (or SPDIF or Wordclock!). For this scenario, soundcards generally (but not always) provide the facility to lock the internal clock to an external source. As I have already mentioned, this could arrive in several different formats, namely SPDIF, ADAT, wordclock or, occasionally, a proprietary link between soundcards from the same manufacturer.

In this case, the soundcard's master oscillator that I have already discussed requires some method of varying its frequency to enable it to be locked to the external source. To achieve this lock several things have to happen.

First, the source clock data needs to be processed to extract the word timing (if not already a wordclock input). Both SPDIF and ADAT contain a lot more information (E.g. Audio data!) than the basic data rate but for synchronising clocks the data rate is all we want or need. Once we have what is effectively the wordclock for the source then the clock must be evaluated to determine its sample frequency, be it 44.1kHz, 96kHz etc. Once this is done, the master oscillator dividers can be set up to match the incoming data. Naturally whatever the sampling rate, the wordclock runs at a much lower frequency than the master oscillator so we must now have a circuit that locks the slaved master oscillator to the incoming source. This is called a phase locked loop (PLL) and there are many, many ways in which this can be implemented.
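To give a feel for what a PLL does to incoming jitter, here is a heavily simplified Python sketch. It is not how any particular soundcard implements its PLL. It is a first-order loop modelled purely in terms of timing error, where on every cycle the slaved oscillator is nudged a small fraction (`loop_gain`) of the way towards the reference. The 1 ns RMS reference jitter, the loop gain and the function names are all assumed for illustration, and the oscillator's own noise is ignored.

```python
import math
import random

def first_order_pll(reference_error_s, loop_gain):
    """Track a jittery reference with a first-order loop (timing-error model).

    Each cycle the slaved clock's timing error moves a fraction `loop_gain`
    of the way towards the reference's timing error. A small gain means a
    narrow loop bandwidth and more smoothing of fast reference jitter.
    """
    slave_error = 0.0
    output = []
    for ref in reference_error_s:
        output.append(slave_error)
        slave_error += loop_gain * (ref - slave_error)
    return output

def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))

rng = random.Random(0)                                       # fixed seed for repeatability
ref_jitter = [rng.gauss(0.0, 1e-9) for _ in range(20_000)]   # assumed 1 ns RMS white jitter
slave_jitter = first_order_pll(ref_jitter, loop_gain=0.01)

attenuation_db = 20 * math.log10(rms(ref_jitter) / rms(slave_jitter))
print(f"reference {rms(ref_jitter) * 1e9:.2f} ns RMS, slave {rms(slave_jitter) * 1e9:.3f} ns RMS")
print(f"attenuation of fast jitter: {attenuation_db:.1f} dB")
```

The catch is that the loop only smooths jitter that is faster than its bandwidth. Slow wander on the reference passes straight through, and inside the loop bandwidth the slave can be no better than its reference, while outside it the slave is only as good as its own oscillator.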

While these circuits are in essence quite straightforward, I have come across many designs over many years that fall well short of the desired performance. In one notable case, a client thought performance was OK (contrary to their customers) - simply because they tested the circuit the same way as soundcard manufacturers. In this case not only did I have to point out the error of the test method, I also ended up completely redesigning their PLL, as it was clear it was hopelessly inadequate and that they had not grasped many of the basics of PLL design. Unfortunately I have come across this many times over the years - don't get me wrong though, it's good business for me!

Now if we take these more complex set ups where one or more devices are slaved to a master clock the whole situation becomes more variable. As Boswell pointed out, without knowing the specific circuits we can't easily surmise where problems might arise or what the "real" timing errors of these systems might be.

More later...

Well it is now much later than I expected!

So hopefully I have now explained reasonably clearly how we lock one clock to another. The only note to make is that we now have a master oscillator that can be shifted in frequency but when used on internal reference, the soundcard will use a fixed voltage to set the reference frequency. The addition of this control system inevitably contributes additional phase noise but unless the designer has been very careless, the extra noise should be insignificant.

So what happens when we lock one reference to another and use both soundcards for recording? Well first of all, with the spec's I gave previously:-

Jitter

Jitter Attenuation >60dB, 1ns in=>1ps out

Given that spec you might reasonably expect that given two soundcards with these specifications that the maximum variation in sampling time would perhaps be

Not even close I'm afraid! This, again, is precisely why I hold that the way jitter spec's are currently presented is next to useless. Not only that but without considerably more information we cannot even hope to calculate the real situation.

What we certainly cannot expect is that when a certain cycle has a minimum period (i.e. clock period - 20ps) the next cycle will somehow magically balance the error with a period with an extra 20ps. In fact it is normally very much the opposite. In addition, while the example spec. I have used states RMS, 20Hz - 20kHz, it is a fact that the noise will, as a result of being essentially Gaussian noise, have more significant components nearer to DC. There is simply nothing about the "audio" bandwidth suggested by the spec. that makes the sub 20Hz performance irrelevant. This lower frequency noise (jitter) cannot be ignored when evaluating the overall timing of a clock, and this is considerably compounded when two clocks are slaved together. While the jitter in this bandwidth can certainly be measured, to present the spec in this way is simply masking the truth.
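A small Python sketch may help show why a per-cycle figure says so little about absolute timing. In all three made-up clocks below, every single period is off by exactly 20 ps, so each would look identical on a per-period spec. What differs is the order in which the errors arrive, and therefore how far the edges wander from their ideal instants. The 48 kHz rate, the 1000-cycle run length and the names are assumptions for illustration only. A real slaved clock is pulled back by its PLL, so this is not a model of any actual device.

```python
import random

def accumulated_time_error(period_errors_s):
    """Running sum of per-cycle period errors: how far each edge has
    drifted from where a perfect clock would have put it."""
    total = 0.0
    drift = []
    for err in period_errors_s:
        total += err
        drift.append(total)
    return drift

def peak_to_peak(values):
    return max(values) - min(values)

n = 48_000        # one second of cycles at an assumed 48 kHz
step = 20e-12     # every period is wrong by 20 ps in magnitude
rng = random.Random(1)

# Errors that neatly cancel on alternate cycles (the "magic" case).
alternating = [step if i % 2 == 0 else -step for i in range(n)]
# Errors with a random sign each cycle.
random_signs = [rng.choice((step, -step)) for _ in range(n)]
# Slow wander: 1000 long periods in a row, then 1000 short ones, and so on.
slow_wander = [step if (i // 1000) % 2 == 0 else -step for i in range(n)]

for name, errs in (("alternating", alternating),
                   ("random signs", random_signs),
                   ("slow wander", slow_wander)):
    p2p = peak_to_peak(accumulated_time_error(errs))
    print(f"{name:12s}: edges wander {p2p * 1e9:.3f} ns peak to peak")
```

Only the alternating case keeps the edges within 20 ps of ideal. With slow wander the same 20 ps per-cycle error builds up to 20 ns, a thousand times larger.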

I was intending to write more in this session but have run out of time. I'll be back later.

On a side note, and also related to my issue:

All of the literature from Presonus suggests that the device doing the most work (in my case, the Firestudio) should be the master.

The ADAT devices should be set to slave. Now, in my case, at least one of these devices has issues doing that.

Assuming they all play nice, is the above the truth?

I only ask b/c you refer to having the main clock or oscillator lock to external devices.

Is there a preferred master-slave relationship?

Or is it a matter of (esp. in my case) go w/ what has the most stability?

And does this relationship have an effect on jitter?

If the answer adds to the discussion, please provide it.

If not, continue on.

soapfloats, post: 347276 wrote: On a side note, and also related to my issue:

All of the literature from Presonus suggests that the device doing the most work (in my case, the Firestudio) should be the master.

The ADAT devices should be set to slave. Now, in my case, at least one of these devices has issues doing that.

Assuming they all play nice, is the above the truth?

I only ask b/c you refer to having the main clock or oscillator lock to external devices.

Is there a preferred master-slave relationship?

Or is it a matter of (esp. in my case) go w/ what has the most stability?

And does this relationship have an effect on jitter?

If the answer adds to the discussion, please provide it.

If not, continue on.

First of all I had not realised I had implied that the master clock should be slaved to another. I'm not sure where that is, so I'll have to read back! The key here is that the master clock is defined as being the source clock with other devices slaved to it. If you change which device provides the source clock, you have effectively changed the master.

In your case then certainly the Firestudio should be the master. With your set up, using one of the DIGI's as master and slaving the Firestudio to that would be an option but you should also slave the second DIGI to the first DIGI either via wordclock or SPDIF. If you slave the second DIGI to the FireStudio, while it should work, you have no overall master and jitter will accumulate. As one of the DIGI's appears to have a fault and will not lock reliably to an external source you may have no option but to use this one as master. If we assume that the clock circuits in both the DIGI's and Firestudio are the same (not a safe assumption by any means) then this should not degrade the overall performance. That is the rub, there is no way of telling from the spec's which configuration would give the best performance. As one of your DIGI's is dodgy (!) and presumably not warranted, it may be worth giving the insides a close inspection for dry joints etc. as it seems to be intermittent.

I intend covering more on master/slave set ups later.

Just another quick thought for soapfloats. It could be that the reason the faulty DIGI will not lock reliably is due to a fault in the oscillator (among many other things of course). It is therefore possible that using this DIGI as the master clock could also mean overall increased jitter. The only way to tell for sure is to get the faulty DIGI tested and preferably fixed.

I've managed to find a bit of time to get some basic photo's together!

Rather than just spout on about how jitter arises and what the spec's mean I think these photo's will show what happens a bit more clearly. What I've done is to use my Yamaha 01V (original) together with my original MOTU828.

These are linked together via ADAT lightpipe and I've used both devices as the clock source to measure the relative jitter on the S/PDIF outputs. In both devices the cycle to cycle jitter is in the order of a few tens of picoseconds (as are, I expect, just about all soundcards that are slaved or not). I've never bothered to do this before and the exercise has proved what I expected but also thrown up one small oddity I did not anticipate.

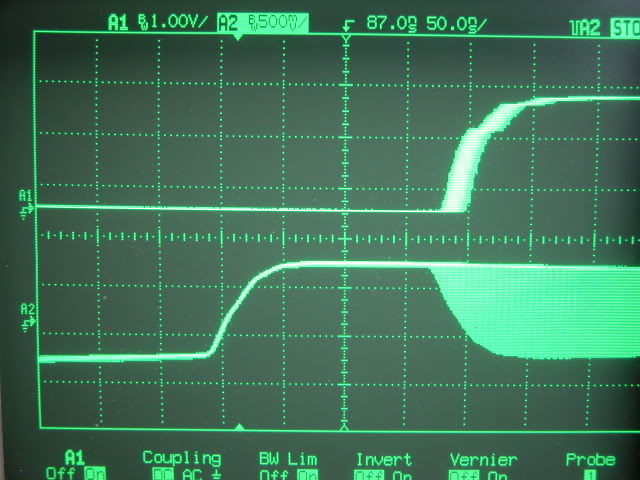

OK, the first photo is of the 01V slaved to the MOTU on a coarse scale to see the timing variations. I'm afraid my "best" scope is currently dead so I have used an older digital scope which is quite adequate to show the problems and also allows me to display the full range of the timing variations over a period of time.

The lower trace is the 01V S/PDIF output and the upper is the MOTU output in all cases. Horizontal scale is 50ns per division. The first thing to notice is that the 01V output is delayed by approximately 250ns relative to the MOTU. This is a systematic error and although a perfect system would show the signals in perfect sync., in practice a fixed delay of 250ns is not large enough to cause any phasing issues. Bear in mind that the period of 20kHz is 50us so 250ns equates to 1.8 degrees of error - absolutely insignificant compared to moving a mic a fraction of an inch! What you can also see is quite a timing variation on the upper trace. Bear in mind I am always triggering the scope from the 01V signal so that variation is effectively the jitter on the 01V output relative to the MOTU output. This is shown more accurately later but note that the relative jitter is around 20ns or so. Forget cycle to cycle jitter, this measurement is what is REALLY important as it shows the timing of when you will actually get your samples.
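The 1.8 degree figure is easy to check: phase error in degrees is 360 × frequency × delay. The short Python sketch below does that sum and also converts the delay into an equivalent distance for sound in air. The speed of sound of 343 m/s (roughly room temperature) is my assumption and not something from the measurements, and the function names are made up for this example.

```python
def delay_to_phase_degrees(delay_s, freq_hz):
    """Phase shift, in degrees, that a fixed delay represents at a given frequency."""
    return 360.0 * freq_hz * delay_s

def delay_to_distance_mm(delay_s, speed_of_sound_m_s=343.0):
    """Distance sound travels in air during the delay (343 m/s is an assumed value)."""
    return delay_s * speed_of_sound_m_s * 1000.0

phase = delay_to_phase_degrees(250e-9, 20_000)
distance = delay_to_distance_mm(250e-9)
print(f"250 ns at 20 kHz = {phase:.2f} degrees")
print(f"250 ns of sound travel in air = {distance:.4f} mm")
```

On those assumptions the 250 ns offset corresponds to moving a microphone by less than a tenth of a millimetre.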

Photo 2 is the same set up but with the MOTU slaved to the 01V.

Here we see that the amount of jitter is similar to the first photo, but this time the MOTU output is delayed relative to the 01V, by the smaller amount of 200ns. Again, for our purposes this is not really relevant. What I cannot easily show you here is the "nature" of the jitter, so I'll just have to try and explain the differences I noted. The jitter caused when the 01V is slave is apparently relatively wideband and appears to be essentially Gaussian noise. Without resorting to my spectrum analysers (and I'm NOT lugging them from my lab to the studio) I don't know what the full bandwidth of the noise is so this is just my judgement. With the MOTU as slave, as we can see, the magnitude of the jitter is about the same but the character is quite different and is predominantly low frequency (you can actually see it bouncing around as the scope picture builds). These different characteristics will alter how the sampled signal becomes modulated by the jitter.

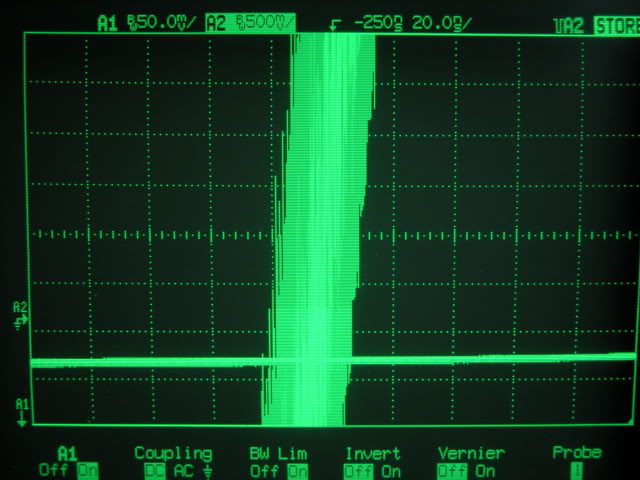

The third picture is the same as #1 but zoomed in to see the jitter more precisely, showing peak to peak jitter of around 26ns.

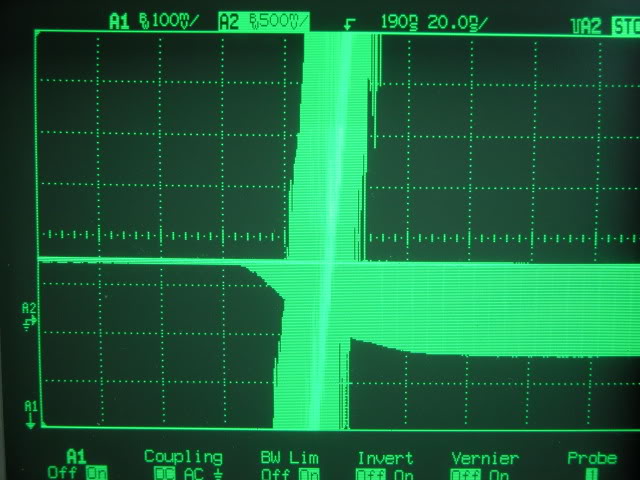

#4 is as #2 showing about 23ns jitter.

While I was doing these tests, I was storing the results over about 45 seconds or so, which seems adequate to catch all the variations. However, I did get diverted and noticed that the MOTU, when slaved, occasionally has a "blip" which causes a significant increase in the jitter. This is shown in the last photo, #5.

This happens infrequently (every few minutes or so) and I can only ascribe this to some systematic fault in the logic of the system. It certainly does not appear to be a characteristic of the phase locked loop circuit.

The most important thing to take from these photos is that the real world jitter is actually in the order of tens of nanoseconds, rather than the tens of picoseconds that the manufacturers' specifications would have us believe. Also, there is simply not enough information in the "cycle to cycle" measurements for us to calculate the real world jitter figures that I have given here.

Next will be a few simple calculations to show roughly what all this means to our recordings!

PLEASE NOTE (AS KINDLY POINTED OUT BY BOSWELL) THAT I HAVE MADE A CARELESS SLIP IN MY MATHS IN THIS POST AND THE RESULTS I GIVE ARE FAR TOO OPTIMISTIC! PLEASE READ FURTHER POSTS IF YOU ARE INTERESTED.

Sorry but it's been some time since I could add to this thread, so I'll just get on with it for now!

Now I have shown some fairly typical, measured, results in measuring the true timing jitter of slaved clocks, we need to see what kind of effect it will have on our audio fidelity. I will first make a couple of assumptions.

- The highest frequency of interest to us is 20 kHz.

- The maximum level of the converters is 2 V p-p (peak to peak) before clipping.

These are straightforward assumptions; however, I'm sure some will argue for the benefits of having audio bandwidth up to 40 kHz or more. If you are in this camp, all you will need to do is adjust the appropriate figures I will give you.

So if we have a sine wave at 20 kHz, 2V p-p then the maximum slew rate of the wave is (approx) 125 V/s. If we now take the measured results of the jitter as 25 ns (I like round figures!) then the maximum possible variation on a sample is 3.125 uV. This is spread both sides of the ideal timing so the error is around +/- 1.55 uV. I will term this as the "maximum jitter modulation". This is 116 dB below 0dBFS and certainly, for my converters at least, is below the converter noise level.

That is not quite the end of the story though as the perception of a signal at this level depends on what the signal is. If the jitter noise happened to be the result of a sine wave modulation, the signal created by the jitter would be a similar sine wave. Because coherent signals can easily be perceived below noise then such jitter might be noticed, particularly in quiet passages (sadly not likely with a modern compressed CD).
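To illustrate the point about coherent jitter, here is a minimal simulation sketch. Everything in it is an assumption chosen for convenience rather than a measurement: a 48 kHz sample rate, a 10 kHz test tone, and a pure sine jitter of +/- 12.5 ns (25 ns peak to peak) at 1 kHz. It samples the tone at the jittered instants and measures the discrete tone that appears at the tone frequency plus the jitter frequency, then compares it with the small-angle phase modulation estimate of beta/2, where beta = 2*pi*f0*jitter_peak.

```python
import cmath
import math

FS = 48_000             # sample rate in Hz (assumed)
N = 4_800               # 0.1 s of samples, so each tone spans whole cycles
F0 = 10_000.0           # test tone in Hz (assumed)
FJ = 1_000.0            # frequency of the sine wave jitter in Hz (assumed)
JITTER_PEAK = 12.5e-9   # +/- 12.5 ns, i.e. 25 ns peak to peak


def sample_with_jitter():
    """Sample a unit sine at instants displaced by sinusoidal jitter."""
    samples = []
    for n in range(N):
        t_ideal = n / FS
        t_actual = t_ideal + JITTER_PEAK * math.sin(2 * math.pi * FJ * t_ideal)
        samples.append(math.sin(2 * math.pi * F0 * t_actual))
    return samples


def tone_amplitude(samples, freq_hz):
    """Amplitude of one frequency component (single-bin DFT)."""
    acc = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / FS)
        for n, x in enumerate(samples)
    )
    return 2 * abs(acc) / len(samples)


samples = sample_with_jitter()
carrier = tone_amplitude(samples, F0)
upper_sideband = tone_amplitude(samples, F0 + FJ)

sideband_dbc = 20 * math.log10(upper_sideband / carrier)
beta = 2 * math.pi * F0 * JITTER_PEAK          # phase modulation index
predicted_dbc = 20 * math.log10(beta / 2)      # small-angle approximation

print(f"Measured sideband:  {sideband_dbc:.1f} dB relative to the tone")
print(f"Predicted sideband: {predicted_dbc:.1f} dB relative to the tone")
```

The result is a single discrete tone offset from the signal by the jitter frequency, rather than broadband noise, which is what makes this kind of jitter potentially easier to perceive.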

This is why the nature of the jitter is important. In the two examples I gave, my 01V gave what appeared to be pretty random phase noise (note that I could not directly measure this with the set up I had), so the jitter modulation is non-coherent and therefore much less likely to be perceived. In the other instance, the MOTU 828 had what appeared to be predominantly very low frequency jitter and such modulation would result in signals outside the audio bandwidth. Relate this to the specification I gave much earlier, in reference to Soapfloat's queries, where the jitter bandwidth was given as part of the spec. Although that particular spec tells us very little of what the actual jitter is, it does tell us that the jitter should not cause significant jitter modulation within the audio bandwidth.

I hope this gives some idea of what the real magnitude of the problems arising from clock jitter is. I hope that this description has been both useful and understandable and that you will now have an appreciation of what clock jitter means in the "real" world. In essence, unless you have very poor clock circuits, it is very unlikely to impair your recordings. Of course it would be nice to have things perfect but it is also good to appreciate the engineering compromises we designers always have to make.

For those interested this last bit explains a little bit more of the maths and the reasons for the assumptions I've made in the calculations. Please skip it if you're not bothered by it.

A sine wave is described mathematically by Vout= A*sin(wt) (using w to represent omega), where the magnitude of the wave is set by A. In this case A=1 gives a sine wave of 2Vp-p. To find the maximum slew rate, we differentiate this expression with respect to time to give dV/dt (the rate of change of voltage) = w cos(wt), this is a maximum when cos(wt) = 1, hence dV/dt(max) = w. w = 2*pi*frequency = 125.663 V/s.

While I fully appreciate many musical instruments can produce much faster transients than this, the only implication is that frequencies above 20 kHz are present, hence this is a sensible assumption. I appreciate that many electronic circuits can also create high order distortions within the audio band as a result of these higher frequencies. The fact is that even with a 20 kHz square wave, if we could put that through a perfect "brick wall" 20 kHz LP filter, then there would be no dV/dt exceeding this figure. Indeed many high quality power amplifiers use a (simple) LP filter on their input stage in order to prevent fast transients causing any of the high order problems normally more prevalent in power stages.

If you prefer to take 40 kHz as a yardstick (although I have never personally seen a compelling reason to do so) then the only effect is to increase the jitter modulation noise by 6dB from the figure I calculated above, i.e. 110dB below 0dBFS.

While you may consider this to be a significant degradation to the highest performance converters, it is also important to compare like with like. Converters boasting up to 120 dB of dynamic range are available, but bear in mind that these will be "weighted" figures, whereas the calculations I have given here are not. I could only weight them if I knew the spectral density of the jitter noise. It is also highly unlikely that you will have a recording facility with a low enough ambient noise to ever achieve such dynamic range figures in any case.

What I should also point out (as I have not made it clear) is that the figures I have given are absolute worst case. It is not possible for the 125 V/s slew rate to exist more than instantaneously and this will only occur with a signal of 0dBFS at 20 kHz. Real audio waveforms will be very unusual (effectively impossible) if they contained anything remotely near this level. The net result is that normal audio data will have a very much reduced jitter modulation noise from the figures I gave. This all tends to suggest, to my engineering side, that jitter noise will very very rarely be the cause of any problems.

I hope that I have not only explained what clock jitter is, as originally asked, but also shown what effect it will have and finally that, for all intents and purposes, it would never, under any normal circumstances, cause us any real problem.

Of course if you find any part of this explanation unclear or incorrect I would welcome your comments in this thread. I will then try to explain further or correct any errors. Equally if you find you agree with this explanation, please chime in as that could also add weight to what has been a purely solo effort!

Good technical stuff....always exciting and interesting to me when I can read some tech talk!

I've been following another forum thread where the ongoing discussion is external clocks. The discussion for the most part is high end clocks....

I guess I won't mention any names but the debate is whether 10 MHz atomic master clocks create better perceived audio.

The science on the one side makes claim that a properly designed internal clock and jitter suppression circuitry within a well designed converter is a better clock than using one of these 10M external master clock units. The other side claims that highly renowned, experienced producers and engineers have no problem discerning a noticeable difference in the quality of the audio being converted and monitored from their A/D/A's when using said high end clocks.....

While I lean toward the science and engineering side of this and not having any expert listening experience (I think I have good ears LOL)...or even really high end equipment at my disposal ($6000 for an atomic clock is a little out of my price range!)...do you think there is something going on here that has a reasonable explanation? Or is this high end clock thing hyped and is there possibly "listening bias" going on here with experienced people hearing a perceived improvement?

In my mind and understanding, with A/D/A reaching 120 dB of dynamic range and possible sideband artifacts down in the -110 dB range, is it really possible that these extremely accurate timing circuits could produce a perceived improvement in the audio, and where (if at all) could this be shown or proven?

So thanks MrEase, Boswell and Scott for your contributions to this thread!

I'm reading it.....

And FWIW RO is far more professional, open and truly free from the incredibly argumentative nonsense that goes on over there!

Thank you for that audiokid!

MrEase, post: 350248 wrote: A sine wave is described mathematically by Vout= A*sin(wt) (using w to represent omega), where the magnitude of the wave is set by A. In this case A=1 gives a sine wave of 2Vp-p. To find the maximum slew rate, we differentiate this expression with respect to time to give dV/dt (the rate of change of voltage) = w cos(wt), this is a maximum when cos(wt) = 1, hence dV/dt(max) = w. w = 2*pi*frequency = 125.663 V/s.

....

So if we have a sine wave at 20 kHz, 2V p-p then the maximum slew rate of the wave is (approx) 125 V/s. If we now take the measured results of the jitter as 25 ns (I like round figures!) then the maximum possible variation on a sample is 3.125 uV. This is spread both sides of the ideal timing so the error is around +/- 1.55 uV. I will term this as the "maximum jitter modulation". This is 116 dB below 0dBFS and certainly, for my converters at least, is below the converter noise level.

Great analysis, but I think you dropped the k in kHz, making the figures 60 dB worse than you suggested!

At 20 kHz, the angular frequency is 125664 rad/sec, so the max gradient of a 1 V pk sinewave is 125.664 V/ms or 0.125664 V/us. Your (huge) 25 ns jitter translates to a 3.14159 mV (pi) uncertainty pk-pk. This is -56 dBFS, which would be very audible.
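The corrected arithmetic can be reproduced with a short sketch. The function name and its parameters are illustrative only; it assumes, as in the post being quoted, a 1 V peak (2 V p-p) full-scale sine and treats the 25 ns figure as peak to peak jitter.

```python
import math


def worst_case_jitter_error(freq_hz, jitter_pp_s, peak_v=1.0):
    """Worst-case sample error caused by timing jitter at a zero crossing.

    Returns (max slew rate in V/s, pk-pk error in V, error in dB relative
    to the pk-pk full scale).
    """
    max_slew = 2 * math.pi * freq_hz * peak_v      # d/dt of A*sin(wt) peaks at A*w
    error_pp = max_slew * jitter_pp_s              # voltage change over the jitter window
    full_scale_pp = 2 * peak_v
    error_dbfs = 20 * math.log10(error_pp / full_scale_pp)
    return max_slew, error_pp, error_dbfs


slew, error_pp, error_dbfs = worst_case_jitter_error(20e3, 25e-9)
print(f"Max slew rate: {slew:.0f} V/s")
print(f"Error pk-pk:   {error_pp * 1e3:.3f} mV")
print(f"Level:         {error_dbfs:.1f} dBFS")
```

Passing 40e3 instead of 20e3 doubles the slew rate and so raises the result by about 6 dB.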

Big thanks to Boswell for pointing out the slip! I can only say "whoops", this type of thing always happens when you try to fit these things in with the time available. I actually have the slip of paper in front of me where I have written down 125.663 V/s instead of 125.663 V/ms! I must admit that the consequent results took me by surprise and I thought they had got me out of going in as deep as I originally anticipated!

Of course Boswell is correct and this is more the result I had been expecting (never having bothered to calculate this before) and which my earlier posts had been "teeing up" so to speak.

So the explanation continues with what I had originally anticipated saying.

Unfortunately I can't do this immediately but I will be back soon....

Thanks again Boswell!

Sorry I haven't been around for a while. I'm sure you realise that we all get busy from time to time.

So, the story so far is that we can see that the absolute worst case (when correctly calculated!) with the example I have given is -56 dBFS. This is pretty awful but let's look a bit closer at my assumptions.

1. With a full scale 20kHz signal the -56 dBFS level will only occur at zero crossing. Using the same formula the noise level will actually be zero (minus infinity dBFS) at the peaks (as Cos90 = 0). Clearly the "real" average figure would be somewhere between the two so things will not be as bad as it seems.

2. A full scale 20 kHz signal is not going to occur in "real" music. There is no instrument that produces a fundamental at this frequency, only harmonics. By nature, harmonics are lower in level than the fundamental and hence the chances of seeing this "worst case" are significantly reduced. Where we will get an effect is with fast transients (most common with instruments such as cymbals and some other percussion). By their nature these fast transients are almost one off events hence the effect of jitter will not be a constant noise on the signal but more accurately a tiny timing error on the transient more akin to shifting a mic a fraction of an inch!
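Point 1 can be put into rough numbers. The sketch below is only an illustration under a simplifying assumption: that the jitter is independent of where in the cycle the sample falls, so the error scales with the instantaneous slope cos(wt). Averaging the squared slope over one full cycle gives the RMS factor relative to the zero crossing worst case.

```python
import math


def rms_slope_factor(steps=10_000):
    """RMS of cos(wt) over one full cycle, evaluated numerically."""
    total = sum(math.cos(2 * math.pi * k / steps) ** 2 for k in range(steps))
    return math.sqrt(total / steps)


factor = rms_slope_factor()
reduction_db = 20 * math.log10(factor)

print(f"RMS slope factor: {factor:.4f}")
print(f"Average error relative to the zero crossing worst case: {reduction_db:.2f} dB")
```

Under that assumption the cycle-averaged figure for a full scale sine sits only about 3 dB below the worst case, so most of the real world improvement has to come from point 2, i.e. the much lower level of high frequency content in actual music.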

So what we can deduce is that we are never going to get a "real" jitter noise problem that produces a continuous -56 dBFS problem. We can also deduce that in real terms any "problem" is going to be small but possibly audible. Audibility will certainly depend on the source material. We can also see that this problem will only occur on any soundcard slaved to an external timing reference. If you only use a single sound card, you really should not see a problem.

For this reason, with my own set up I normally have my 01V as reference and only use the 8 ADAT channels to the MOTU, so all inputs use a non-slaved reference. On the odd occasions I need more input channels I am forced to use both sound cards. In these instances I usually set up all the drums and overheads on the 01V and carefully select less harmonically intense instruments for the MOTU: things like bass guitar and mic'd rhythm and lead guitars (bearing in mind a 10 or 12 inch speaker is never going to reproduce much above 5 or 10 kHz).

Using these methods I have never had any noticeable problem from jitter noise. I'm not saying it's not there and it may be possible to get better results but I have never had anyone point out anything other than "what a clean recording".

As Boswell pointed out my (huge) 25 ns jitter, I thought I'd also comment about this as it goes back to my points about the useless way most jitter specs are presented. I also feel that these figures will be quite typical of many units of similar age. What I have not tried is using the S/PDIF I/O for clocks, but I doubt this would help as both ADAT and S/PDIF clocking require a clock recovery circuit to drive the PLLs. These are not always 100% reliable (as evidenced by the odd errors with the MOTU - see the earlier photos). It would no doubt be better to use a wordclock interface but unfortunately the 01V has no such interface. The advantage of the wordclock system is that there is no need for any clock recovery circuit and we have a "direct" signal to lock the PLL. If you can use wordclock I/O with your system, I would always recommend its use over ADAT or S/PDIF.

Without accessing the internals of my soundcards I cannot actually measure the bandwidth of the jitter noise but, as I pointed out with the photos, the MOTU appears to have predominantly low frequency noise whereas the 01V appears to have a much wider bandwidth. This alone tells me something of the nature of the PLL designs and consequently I will now always lock the MOTU to the 01V. I had always done this previously but for no good reason.

Why will I do this? Well, as indicated in the specs I gave for Soapfloat's system, the bandwidth of the jitter noise will only affect our system when the noise is within the audible range. This is because the modulation due to jitter will create noise related to the jitter bandwidth. If the jitter noise bandwidth is outside the audible range, then any modulation will also be outside the audible range.

Finally it is also worth producing some "worst case" figures for systems with better jitter noise. Say we have a system with jitter noise of 2.5 ns. This is 10 times better than my system and I would seriously doubt if any soundcards exceed this figure. The same "worst case" analysis gives us:

125,664 V/s * 2.5 ns = 0.3142 mV, which is -76 dBFS. This 10 times improvement in jitter gives us a 20 dB improvement in worst case performance. Naturally this is well worth having but it is still not perfect; a further tenfold improvement would give us -96 dBFS with 250 ps of total jitter. I cannot believe this is going to be achieved, so the bandwidth of the jitter noise is always going to be a factor.
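The same worst case relationship can be turned around to ask how much total jitter a given error level allows. This is a minimal sketch using the same assumptions as before (full scale 20 kHz sine, 1 V peak, error measured peak to peak against a 2 V p-p full scale); the function name and the list of target levels are illustrative only.

```python
import math


def required_jitter_pp(target_dbfs, freq_hz=20e3, peak_v=1.0):
    """Peak to peak jitter (seconds) giving the target worst-case error level."""
    max_slew = 2 * math.pi * freq_hz * peak_v        # V/s at the zero crossing
    error_pp = 2 * peak_v * 10 ** (target_dbfs / 20)  # allowed pk-pk error in V
    return error_pp / max_slew


for target in (-76.0, -96.0, -120.0):
    jitter = required_jitter_pp(target)
    print(f"{target:7.1f} dBFS worst case needs about {jitter * 1e12:9.1f} ps pk-pk")
```

Each extra 20 dB costs a factor of ten in jitter, which is why the bandwidth of the jitter matters as much as its magnitude.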

The bottom line is that the PLL of the slaved soundcard needs to have a very low bandwidth (less than 20 Hz to keep it inaudible) and this is not easy to achieve when a clock recovery circuit is in use. Using wordclock should certainly improve on this situation, but whether the PLLs of such soundcards are optimised when using wordclock rather than ADAT or S/PDIF is another question.

I hope this has covered various aspects of clock jitter sufficiently. I do not intend to add to this thread unless of course you have more questions. If anything is left unexplained please let me know and also if anything is incomprehensible with the way I have presented this, again, let me know.

I will keep an eye on the thread to see if I can help more but for now I hope you have an answer to the original question!

Are you looking for something more detailed or specific than the http://en.wikipedia.org/wiki/Jitter Wiki article?