Okay, I apologize in advance for what is likely to be a very long-winded post and one that will likely spark people to reply. Recently, one of my engineers brought a project to me that was so riddled with phasing issues, it was virtually unlistenable. When I asked him what happened and questioned his mic placement technique, I ultimately determined that his phasing issues came down to a common misconception about phase correction in common DAW editors.

His problem was that, when he decided to correct for phase issues based on a multi-mic orchestral recording set-up, he zoomed in REAL close on the start of the recording in his editor window, found similar-looking samples of waves, and aligned them vertically. While this sounds like a completely logical approach to many people, there are just a few problems with the concept behind it.

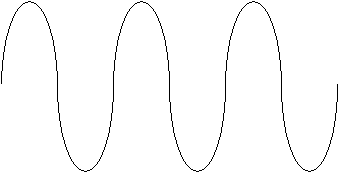

First problem: The picture of the wave that you are seeing represented to you has nothing to do with frequency. It is an amplitude wave only. If you line up, to the best of your ability, the amplitude of the sound, the frequencies contained within are still potentially out of line for phase. By doing this, all you are doing is ensuring that sounds of similar volume are reaching the microphones at "pretty close" to the same time. However, because of the distance between mics, you could have an equally strong signal at both mics which is completely out of phase.

In an amplitude sine wave, if you have a positive peak and a negative peak occurring at the same time - you don't get cancellation - you get reinforcement. If you have two zero crossings at the same time, you have a null in sound. Whereas in frequency sine waves, if you have a positive and negative peak at the same time OR zero crossings at the same time, then you have cancellation.

Second Problem: Most DAW systems aren't truly capable of zooming in with enough accuracy to make the precision -Frequency- alignments that would be necessary.

The simplest solutions to phasing problems are:

Solution 1: Know your math -

Sound travels at about 1142 feet per second in a 78 degree open air environment, or about .88 ms per foot. So, if you have two mics picking up one sound source (in an anechoic chamber *of course*) and one of the mics is 10 feet further away than the other mic, you will be able to adjust one of the mics by about 9 ms and adjust for phase problems. However, because none of us work in anechoic chambers, you will have to take into consideration standing waves and reflections.
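The distance-to-delay arithmetic above can be sketched in a few lines of Python. This is only an illustration under the post's own assumptions (1142 ft/s, one direct path, no reflections); the names `delay_ms` and `delay_samples` are made up for this example.

```python
# Speed of sound quoted in the post (open air, about 78 degrees F).
SPEED_OF_SOUND_FT_PER_S = 1142.0


def delay_ms(extra_distance_ft, speed_ft_per_s=SPEED_OF_SOUND_FT_PER_S):
    """Milliseconds sound needs to travel the extra distance to the far mic."""
    return extra_distance_ft / speed_ft_per_s * 1000.0


def delay_samples(extra_distance_ft, sample_rate_hz=44100,
                  speed_ft_per_s=SPEED_OF_SOUND_FT_PER_S):
    """The same delay, rounded to whole samples at the given sample rate."""
    return round(extra_distance_ft / speed_ft_per_s * sample_rate_hz)


# One mic is 10 feet further from the source than the other.
print(round(delay_ms(10), 2), "ms")
print(delay_samples(10), "samples at 44.1 kHz")
```

The result is a starting value for a track delay, to be refined by ear.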

Of course, minimization of reflections is always the best bet, but standing waves are hard to combat. The best way to figure out the problem frequencies for standing waves will be, again, simple math. Find the measurements of the room that you will be recording in. For example, one of the concert/recital halls I just recorded in was approximately 60 feet long. The prime frequency that will resonate at 60 feet will be 19 Hz. (You can determine wavelength by dividing the velocity of sound - 1142 feet per second - by your frequency.) Obviously, 19 Hz won't be a big problem since it won't be reproduced, but 38 Hz, 76 Hz, 152 Hz, 304 Hz, etc. will all be affected (less so the more you move away from your prime frequency). By measuring the distance between the performer and the two or more mics being used in recording this source, you should try to avoid placing mics on portions of this wavelength that will cause problems. For example, at 152 Hz - an affected, and very noticeable, frequency - your wavelength will be about 7.5 feet. If you place one mic at 7.5 feet from your performer and the other at 3.75, the phase at that frequency will be completely opposite. Moreover, when the frequency is reflected back to the mics, it will again have influence on anything registering 152 Hz and all of its multiples.
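As a rough sketch of the room math above, the following Python uses the post's rule of thumb (prime frequency = speed of sound / room length, then octave doublings). The helper names are hypothetical, and real rooms have more resonances than this simple series, so treat the numbers as places to start listening rather than as a prediction.

```python
SPEED_OF_SOUND_FT_PER_S = 1142.0  # figure used in the post


def wavelength_ft(freq_hz, speed=SPEED_OF_SOUND_FT_PER_S):
    """Wavelength in feet: velocity of sound divided by frequency."""
    return speed / freq_hz


def problem_frequencies(room_length_ft, count=5, speed=SPEED_OF_SOUND_FT_PER_S):
    """Prime frequency for the room length, followed by its octave doublings."""
    prime = speed / room_length_ft
    return [prime * 2 ** k for k in range(count)]


# The 60-foot hall from the post.
for f in problem_frequencies(60):
    wl = wavelength_ft(f)
    # A mic spacing of half a wavelength puts the two mics in opposite phase
    # at this frequency.
    print(f"{f:6.1f} Hz  wavelength {wl:5.2f} ft  half {wl / 2:5.2f} ft")
```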

So, I guess that brings me to solution 2: Mic placement. Following the guidelines above as much as possible, find your best place to place your microphones. Take your time and do it right - some of these issues cannot be fixed once it's recorded.

By now, I'm sure a lot of you are realizing that there are a lot of frequencies and that there is no way to protect against cancellation in all of them. That's true, but if you are able to follow the rules stated above for all of your room's dimensions (which should be easy for those of you who record in the same studio space all the time), you will seriously minimize the possibility of nasty phase problems.

Now, that brings me to solution 3: Use your ears! Very few of us own the machinery and tools required to analyze phase across the entire spectrum, and fewer still know how to use it correctly. So...listen. If you hear a severe lack of bass, or muddy/cupped or scooped mids, or it sounds like your high frequency is being filtered through fan blades, there are phasing issues. Try to isolate what region they are occurring in and take appropriate measures, whether it be with mic placement or adjusting your wave forms in your favorite editor. Don't get carried away though and begin adjusting your waves too much. Remember - 1 foot = .88 milliseconds, or about 39 samples in a 44.1 kHz sampled track.

A quick note - try not to use headphones to detect phasing issues. In a discrete stereo recording, phasing issues can be inter-channel. If your right ear is not hearing what your left ear is hearing, then you won't hear the phasing issues. Use monitors whenever possible for adjusting phase.

Sorry for the lengthy post, but phasing is important and relatively easy to understand.

Thanks,

Jeremy 8-)

Comments

that's why i like to listen mono first... if everything's ok in mono it will also be in stereo...

His problem was that, when he decided to correct for phase issue

This seems to be quite a common problem with software like ProTools - people tend to trust their eyes more and seem to forget the ears. An engineer did the same thing to a classical recording of mine to correct phase issues with spot mics. Too bad he forgot that every plug-in (or routing) in ProTools causes a delay too, and he ended up having a 500 samples delay on one track plus the correction. Another problem of this method is that you can't immediately see how much delay's been added to a track. It took me half an hour to figure out what the problem was. So what I usually do for spot mics is to insert a delay, start with the measured value, listen, fiddle with the delay time, listen again and so on until it sounds right.

Thanks Jeremy! Very informative post that illustrates the danger that comes with the power of modern DAWs. Ya' can't fix everything in the mix. It also reiterates the importance of taking the time to do it right up front, before it goes into the machine.

The more I read posts around here, the more inclined I am to take more time and care tracking.

Sine-wave alignment can also cause popping when punching in on a track while in destructive record. If you zoom in on the pop close enough, it is easy to see what is happening. That's something that wasn't a problem in the analog days.

Nice post Jeremy, Thanks for the info, it has definitely added to what I already know about phase issues.

Good post. Now, the million dollar question.....

What happened to the original unedited raw .wav files? Also, what did you end up doing? Re-recording? Realigning by ear? Or maybe using a special editor/plug or something like the Little Labs phase box? When this happens to me and I can't re-record for whatever reason, then I only use the min number of original tracks that I can get away with, and replace/overdub as necessary. I certainly wouldn't want to sit there for hours trying to line up tracks or phrases on a hit or miss basis until I got it right.

EXCELLENT Post, Jeremy (Cucco)....

I don't get overly caught up in "phase issues" with multi mics, at least not to the extent you described for THAT guy. Sheeesh.... As you point out, it's GOING to happen, we just want to make sure it works to our advantage, not the other way around. (Top & bottom snare mic/out of phase tricks aside, of course!)

I do a lot of orchestral, jazz and (gasp) even operatic recordings. Very often this involves multi mics in the pit (for opera), spot mics onstage for the orchestras, omni pairs overhead, and even mic pairs out in the house for surround mixes, ambience & applause.

While my DAW will indeed do sample-accurate wav zoom/views, I rarely need (or want) to go that deep - and it's pointless with two mics picking up the same sound from two different sources. (Yes, they are GOING to have some phase changes, indeed, based on the wavelengths involved, distance to each mic, and the room resonance, etc...) I only use that sample-accurate view for editing two different versions of the SAME music, like an A and B version of a track, etc.

I CAN say, however, that I listen (the most important part, vs. LOOKING at the waveform) to the music in context, in stereo, in mono, and toggle tracks individually before EVER making a change in the timeline. Of course, with the ambient mics being 70-80 feet away from the stage mics, or a stage that can be 25' wide, there are occasional timing issues, but not as much as some might think. (Remember, the players are all LOOKING at the conductor or listening to each other, in real time, and are therefore already locked in their own "analog" sync. If the mics are within 1-3 of the player, then timing & phasing relative to the other mics automatically takes care of itself.)

Whenever possible/practical, I do an impulse recording (smacking two pieces of wood together is nice, or cracking a leather belt), or I find a fast-transient sound that someone made during sound check or rehearsal, just to "see" what kind of time delays we're experiencing, and what (if anything) needs to be tweaked.
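One way to put a number on the delay seen in an impulse recording is a brute-force cross-correlation: slide one track against the other and keep the offset where they match best. The sketch below is an illustration under stated assumptions, not the workflow described above: the clap is synthetic, the far mic is modeled as a quieter, delayed copy with no room sound, and `best_lag` is a hypothetical helper.

```python
def best_lag(reference, delayed, max_lag):
    """Return the lag in samples at which `delayed` best matches `reference`."""
    best, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Correlation score: sum of products of the overlapping samples.
        score = sum(
            r * delayed[i + lag]
            for i, r in enumerate(reference)
            if i + lag < len(delayed)
        )
        if score > best_score:
            best, best_score = lag, score
    return best


# Synthetic "clap": a short transient surrounded by silence (made-up data).
clap = [0.0] * 20 + [1.0, -0.8, 0.5, -0.3, 0.1] + [0.0] * 200
# The far mic hears the same clap 39 samples later and a bit quieter.
far_mic = [0.0] * 39 + [0.6 * x for x in clap]

lag = best_lag(clap, far_mic, max_lag=100)
print(lag, "samples, or", round(lag / 44100 * 1000, 3), "ms at 44.1 kHz")
```

On real recordings the measured lag is only a starting point; the final value still gets set by listening.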

I think perhaps what started this whole "phase" issue is the old concept of two people talking closely in lav mics, or mics above their heads, out of view of the viewers (on TV, etc.) YES, if the mics are identical and if the speakers don't move around, and the positioning is not quite right, there WILL be phase cancellation (comb filtering, etc.) going on that can be audible, esp in mono. But this phenomenon is so difficult to create in any other way (different mics, different instruments, etc.) that it makes the argument rather pointless in any other scenario. Still, many armchair-sound engineers like to toss that term around and ask: "is the phasing OK?" (I always respond: Relative to WHAT!?!?! ;-)

True/Funny story: Not too long ago, I did a choral recording (with small chamber orchestra) for a client - one of many we've done together - and it ended up as a local, "commercial" release. I got feedback from one of the choir members, who told me (in a complimentary way) that they'd played it for a friend, who was "in the business" and he passed on his praise, including the comment: "Oooh, this sounds great, all the mics sound like they were placed properly, and there's obviously no phase cancellation going on, either!"

HUH!?!? How could he hear THAT with a choir of 100 singers and an orchestra????

Maybe he couldn't find anything else "nice" to say about it. Phase cancellation, my *ss. Hahahaha :twisted:

I'm not quite getting it, Joe: So do you delay the spot mics or not when making orchestral or other multi mic recordings?

In most cases, probably not; if so, just a small amount for time align (NOT nec. phase) relative to the main pair. (If they're front/solo mics, they're probably in the same time-line as the overheads, in respect to front to rear projection in the house.) Depending on the venue and the size of the stage (mic-hang height, etc.) there COULD be a significant delay between the spot mics and the overheads, sure. Each case is different.

If the stage is deep enough, a pair of mics on the winds or brass (say, in the BACK of the orchestra) may sound a bit early relative to the spot mics and overheads, during playback so....they may actually have to be moved backward in time a bit. (Another reason to LISTEN carefully first.) Each spot-mic should be checked accordingly if it's really a big stage, of course.

And again, the ambient mics out in the house can sometimes be VERY late as well, sometimes it may sound like a slap-back if not adjusted for the time delay. If the room is overly reverberant, it may also be best to work with it, and leave it alone (perhaps a more "spacious" sound being the result.)

I should be clearer in stating that some of these adjustments are more for MUSICAL time-align* (as such) than any attempt at "Perfect" phase adjustment. That - perfect "phase" - just isn't going to happen with a large hall, air temp changes, multiple reflections, audience's clothing, etc. across the entire audible spectrum. You can certainly zoom in close enough to get a coherent attack or start-note of a phrase, but trying to get phase-lock in this kind of situation will only make you nuts.

Hope that makes better sense.

*Musical time align - meaning that the goal of the recording is to ACCURATELY capture what happened onstage, and NOT create something new, odd or different from what the musicians intended with echo, delay, pre-delay, etc.

AudioGaff wrote: Good post. Now, the million dollar question...

Very good question AG! Well, my original thought was - I don't pay these guys so that I can fix their recordings! My second, and ultimately final, thought was - I don't want my name tarnished with a sh*tty product. So, I took the original waves and re-mixed. There are some phasing issues you can't overcome, but creative mixing can minimize these.

Now, onto JoeH:

Joe, time alignment and phase alignment are equally important! True, you will never have everything in-phase. As I mentioned in my post, there are simply too many variables. The goal is to minimize your phasing issues. Time alignment has a direct and profound impact on phase alignment. If you were to take a recorded piece with 8 tracks and not time align/phase align, you will have reinforcement and cancellation of some pretty key frequencies.

I would hope that these issues would be clearly audible to anyone recording classical music. Issues such as extreme comb filtering in the upper register - hollowness in the mids and non-existent or exaggerated lows. Even adjusting the mix by just a few samples can make a profound difference.
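To see why a few samples matter, here is a minimal sketch assuming the idealized case of two equal-level copies of the same signal summed with a pure delay between them. In that case the cancellations fall at odd multiples of half the inverse of the delay. `comb_nulls` is a hypothetical helper; real spot-mic blends have level differences and reflections, so the actual dips will be shallower than this suggests.

```python
def comb_nulls(delay_samples, sample_rate_hz=44100, max_hz=20000):
    """Null frequencies when a signal is summed with an equal-level copy of
    itself delayed by `delay_samples` (idealized, must be > 0)."""
    nulls = []
    k = 0
    while True:
        # Nulls sit at (2k + 1) / (2 * delay_in_seconds).
        f = (2 * k + 1) * sample_rate_hz / (2 * delay_samples)
        if f > max_hz:
            break
        nulls.append(f)
        k += 1
    return nulls


# Compare a 5-sample offset with a 39-sample (roughly 1 foot) offset.
for d in (5, 39):
    first_few = [round(f) for f in comb_nulls(d)[:4]]
    print(d, "samples -> first nulls (Hz):", first_few)
```

Nudging a track by a handful of samples moves every one of those nulls, which is why the change is audible.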

My suggestion is to use math and use your ears - don't simply rely on what looks correct according to the amplitude sine wave.

Gee, and I always thought I'd never use this math crap after I got out of high school. ;-)

J...

I always wrongly thought phase was a problem one had when using two identical mics on the same source.

So is it a Frequency and sound Delay issue?

Any good sites on the topic?

Bodhi wrote: Any good sites on the topic?

What would you say about this thread? I'd say we've already found one.

Cucco wrote: In an amplitude sine wave, if you have a positive p

This is an incorrect explanation -

A sine wave represents a single frequency. The time between zero crossings (or peaks) is inversely proportional to the frequency. The amplitude of the sine wave is simply that. That's in the time domain (viewing the signal on an oscilloscope). That very same waveform will show that amplitude at a single frequency (viewed on a spectrum analyzer).

There are no 'frequency sine waves' or 'amplitude sine waves'

So, if you have two sinusoids of equal amplitude & frequency with positive peaks of one and the negative peaks of the other occurring simultaneously, you get cancellation. +1 -1 = 0. The signals are out of phase.

If you have two zero crossings at the same time you aren't getting cancellation, simply because there is no amplitude from either signal. 0 - 0 = 0. These signals can either be in phase or out of phase. You can't tell without looking at each waveform either side of the zero crossing - or the peaks.

If you have two sinusoids of equal amplitude & frequency and the positive (and negative) peaks align, then you have reinforcement. +1 + 1 = 2. The signals are in phase.
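The three cases above are easy to check numerically. This is a small sketch, assuming two unit-amplitude sine waves of the same frequency; the function name `peak_of_sum` and the 100 Hz test tone are choices made for this example only.

```python
import math


def peak_of_sum(phase_offset_rad, freq_hz=100.0, sample_rate=44100, n=4410):
    """Largest absolute value of sin(wt) + sin(wt + offset) over n samples."""
    peak = 0.0
    for i in range(n):
        t = i / sample_rate
        a = math.sin(2 * math.pi * freq_hz * t)
        b = math.sin(2 * math.pi * freq_hz * t + phase_offset_rad)
        peak = max(peak, abs(a + b))
    return peak


print("in phase (0 deg):      ", round(peak_of_sum(0.0), 3))
print("quarter cycle (90 deg):", round(peak_of_sum(math.pi / 2), 3))
print("out of phase (180 deg):", round(peak_of_sum(math.pi), 3))
```

Anything between the two extremes gives partial reinforcement or partial cancellation, which is what happens at most frequencies when two mics are at different distances.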

Sorry to be so nit-picky. Lots of great info in the rest of the post!

Thanks for that dpd - I had been biting my tongue and questioning my own knowledge and understanding about this issue and the positive and negative sine wave example by the original poster in particular.

At least someone else shares the same understanding.

dpd wrote: This is an incorrect explanation -

dpd:

Agreed, I oversimplified, however, the fundamental information, when applied to most DAW software on the market is correct. Obviously, the wave being represented in a standard "edit window" is not capable of representing ALL of the frequencies present in the signal during that snapshot in time.

DAWs display relative volume in their edit windows and represent this as a sine wave. Technically, in a frequency wave, the distance between symmetrical values (peaks to peaks or 0 crossings to 0 crossings) does in fact determine the frequency. However, we are not simply viewing one frequency in a DAW window.

Therefore, what we are viewing is simply a visible representation of the amplitude (Y axis of DAW window) based in time (X axis of DAW window). In physics, your postulations are 100% accurate, but in the crazy world of DAW, some laws of physics must be disregarded so the mass populace can understand the basic functionality of their product.

Perhaps I should have been more specific in relating this information to the computer processing world. I have a tendency though to run over with the geek speak and flub up the little stuff assuming people either don't care or don't get it. This gets me in trouble sometimes with fellow geeks (no insult intended - being a geek nowadays is popular, I think?)

Adobe Audition has a pretty cool tool which allows you to view the spectrum of sound based on spectral density in a time-relative environment. This can be an awesome tool for precision editing of specific frequencies if used correctly.

Thanks for your insight and clarification,

Jeremy

Agreed, I oversimplified, however, the fundamental information,

I know what you're getting at, but this is not the most accurate way to state it. The displayed time waveform includes all the frequencies at that period of time. It has to - physics dictates it. Time and Frequency are inextricably linked.

To view low frequencies one needs to look at a longer time segment. To simultaneously reveal the high frequencies, one needs to use more time slices in that segment. But, the time waveform on the DAW contains all the data.

A sine wave represents one frequency - the zero crossings of that waveform dictate the frequency. What the DAWs are doing is just drawing lines between two successive audio samples. They look like sine waves, but technically, they aren't - but they are comprised of sine waves. When one looks across multiple samples in the DAW edit window you are seeing the net results of all frequencies in the signal being summed in amplitude over the time period being displayed.
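A small numerical sketch of the point about summed sine waves: build one waveform from two tones, then measure how much of a given frequency it contains by correlating it against a sine and cosine at that frequency (a single-bin DFT). The 50 Hz and 1000 Hz tones, their levels, and the name `amplitude_at` are all assumptions made for this illustration.

```python
import math

SAMPLE_RATE = 8000
N = 8000  # one second of audio, so whole-Hz frequencies fit exactly


def composite(i):
    """One sample of a loud 50 Hz tone plus a quieter 1000 Hz tone."""
    t = i / SAMPLE_RATE
    return 1.0 * math.sin(2 * math.pi * 50 * t) + 0.3 * math.sin(2 * math.pi * 1000 * t)


# A single list of amplitude-vs-time values, like one track in an edit window.
samples = [composite(i) for i in range(N)]


def amplitude_at(freq_hz, samples, sample_rate=SAMPLE_RATE):
    """Amplitude of the freq_hz component, via correlation with cos and sin."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * freq_hz * i / sample_rate)
             for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq_hz * i / sample_rate)
             for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


for f in (50, 400, 1000):
    print(f, "Hz:", round(amplitude_at(f, samples), 3))
```

The single list of samples holds both tones; nothing at 400 Hz was put in, and nothing is found there. Picking out those components by eye in a busy orchestral waveform is a separate matter.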

Unfortunately, it is absolutely impossible to disregard 'some' laws of physics - DAWs included. ;)

We are saying essentially the same thing. I'm just doing it from an EE's perspective. On to more important issues...

dpd wrote: I know what you're getting at, but this is not the m

With all due respect to you and your electrical engineering background, this simply is not correct - at least not in the world of DAWs. (And I do mean the utmost respect - Electrical Engineering is a tough job that takes a lot of friggin brains.) True, in the laws of physics, frequency and time are linked. However, this is the law that is broken (or ignored, if you will) for convenience's sake by the DAW software. This is not to say that they somehow changed how frequencies work, they simply do not display them. ONLY 1 WAVE FORM IS REPRESENTED (not yelling, just emphasis :-)) in a DAW window. If you look at a "longer time segment," you are not seeing a lower frequency. If that were the case, if you zoomed to a 1 second segment, there would be hundreds of frequencies represented at various amplitudes. Therefore, the DAW window would be an absolute mess. Bear in mind, when one note is played, hundreds of harmonics, sub-harmonics, and micro-harmonics are present.

My point exactly - sine waves represent only 1 frequency. Therefore, if I were to record an orchestra with 2 mics - the one channel representing either mic would include thousands of frequencies at any given time. So much that the DAW window would simply look like a solid blob, not one single continuous wave.

Therefore, as you stated, you are only seeing the amplitude represented by the summation of the frequencies at that point in time. The wave you are seeing has actually nothing to do with the frequency present at all - only amplitude. The unfortunate problem that confuses people is that the DAW manufacturers choose to represent this information as a sine wave, when in truth, it's simply an amplitude plot.

Alright smarty pants - I think you knew what I meant. "No laws of physics were harmed in the making of this post." No, the laws of physics aren't "disregarded," they're simply not employed at the time of the software programming. (Now that's a sign of a poor economy, when even the laws of physics can't find employment.)

Yep, essentially we are agreeing. I'm just stating my point from an Acoustical Physicist point of view. 8)

Now, admit it, half the people reading this post are rolling their eyes now saying, damnit, shut up already (to both of us), cuz let's face it, most people don't really care HOW it works, just that it WORKS...

Cheers!

Jeremy

BTW, since inflection cannot be easily displayed through type, let me just make it really clear that I'm not trying to come off inflammatory in the slightest. I truly enjoy having good clean discussions with intelligent people about things like this. Thanks for exercising the brain with me!

THANK YOU; I've always felt that's how it works, but you put it into words much better than I could.

As to "rolling my eyes" and saying "Get on with it..." .....nah! It's nice to read stuff from guys who run on more than "I" do, yet still make sense.

And my compliments to both of you, for avoiding a potential flame-war (esp, as you mention, you're both essentially in agreement) with such decorum and professional courtesy. It's REALLY nice to read a back and forth passionate discussion (however nerdy we may be) and still stay on good terms.

I just wish more people paid attention to this sort of thing....phase as WELL as a civil discussion with mutual respect. :wink:

"you are only seeing the amplitude represented by the summation of the frequencies at that point in time. The wave you are seeing has actually nothing to do with the frequency present at all - only amplitude"

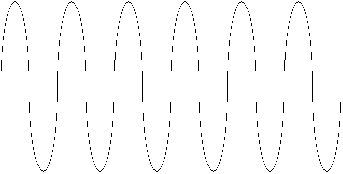

Sorry but this ain't true. DAWs do show amplitude and frequency in the wave readout. Time and amplitude are displayed (amplitude is the vertical axis of the wave and time is the horizontal one). Sound is simply amplitude changing over time so the wave is the perfect representation. People in this post are calling the wave readouts in a DAW a "sine wave". This is technically incorrect. A sine wave changes in a linear fashion in time. The mixture of frequencies in any sound is reflected in the fact that the wave has a random-looking pattern. Zoom in on a vocal and you can spot the low frequency (long wavelength) of a vocal "pop" and the high frequency (short wavelength) of an "S" sound at the start of a word. This is basic physics.

Point taken though. You wouldn't be able to tell which frequencies would be out of phase by looking at them on your drum mics.

Lobe,

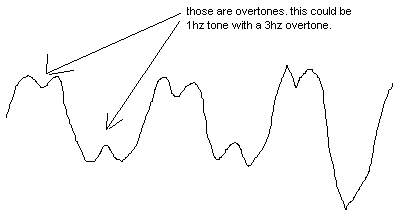

Sorry, but this still isn't correct. While you are correct that 'sine' wave is an incorrect term, individual frequencies are NOT represented in DAW windows. Think about it, if you sing a middle C, you would clearly be creating the overtones of C, CC, c1,c2,g2,c3,e3,g3,bflat3,c4 and many others. Are you telling me that, in that case only the c1 is represented? Well, then the plot being displayed is a summation of all included frequencies. Only in electronic music is it possible for one frequency to exist by itself. In all other scenarios, you have overtones. Not to mention the fact that you are likely picking up more than one instrument.

If you were to look at a wave that looks like this: (The extra dots are only there to make it look right)

........ /

____/...___/

.........../....../

........./

Then what you are telling me is that the first wave, because it is longer than the second, is therefore lower in frequency? BS! In the real world, this would be true. But we are not dealing with the real world here. You can't represent all wavelengths in a given recording at the same time!!!!! IT IS PHYSICALLY IMPOSSIBLE for the eye to comprehend all of the frequencies represented.

I understand that a lot of you are holding onto the notion that a wavelength, when drawn, should represent the frequency based on the distance between symmetrical points. While that is true for a single frequency, how in the H#LL do you expect to represent 8,000 frequencies in one display window? Think about it!

If I record an orchestra with 2 mics, at any given point in time, those two mics may very well be picking up every frequency from 20-20,000 Hz and then some (or then a lot more!). You mean to tell me that the one little line on the screen showing me a small wavelength is the piccolo cuz it's short and narrow? Come on people, use your heads.

Again, let me state this for the record and make it clear ----

DAW wave displays ONLY show the amplitude of a signal based on the summation of all of the frequencies present at that point in time. The X axis of this equation is simply how that amplitude is represented on a time based scale.

One more quick example - a 20,000 hz wave form would be about .00005 seconds in length and a 20 hz wave form would be about .05 seconds in length based on simple math. So, since most of the "wave forms" represented in a DAW window are much closer to the .05 length than the .00005 length, does that mean that everything we listen to is made up of content exclusively in the 20-100 Hz range?

Okay, since I just can't let sleeping dogs lie - one more example. Use your pencil tool in any editor worth its salt. What can you fix? Only overs and clips. Have you ever actually changed a pitch with the pencil tool? No? I didn't think so. Because, once again, you are only seeing amplitude NOT individual frequency.

Sorry for the rant,

J...

Well I don't mean to squabble over this but the longer waves do indicate low frequencies and the shorter ones do indicate higher ones. This is a fact. The two waves you show do look like an octave apart, with the lower one being roughly twice as loud. Of course you can't spot which little squiggly part of the wave is a piccolo amongst the sound of an orchestra, but looking at a sample of a piccolo against some sub bass samples I would expect to see contrasting wave patterns (generally short and long) for these instruments.

Lobe wrote: Well I don't mean to squabble over this but the long

So how do you account for the thousands of frequencies present at any given point - there certainly are not thousands of frequencies represented in a DAW window...

I didn't read the whole thread, so at the chance of looking like a complete idiot, here is my take. (I look like an idiot anyway ;) )

I thought the DAW display of a waveform would essentially represent what the speaker would be doing (or what the mic's diaphragm was doing when it captured the analog signal)? A speaker's cone can obviously NOT be in 2 places at one time, yet it is able to play pink noise and such.

Once you add more than one "pure tone", you will STILL have one waveform, but with the higher tone "riding on top of" the lower tone (also increasing the RMS level of the composite waveform). So you can see the low tone as a longer wave, and the high tone is represented by ripples within this low tone, right? So, you can NOT see each individual frequency, but the overall effect of the constructive and destructive addition of the individual pitches (or addition of multiple tracks). OK - Now tell me how I was wrong :oops:

:cool:

Randyman,

You are essentially correct - but again, it's important to understand that ALL the frequencies cannot be represented. So while you are seeing a representation of frequency, it's a summed representation. And as you state, the cascading frequency creates an increase in amplitude.

Imagine if two frequencies are being performed simultaneously that are only 2 Hz apart. One couldn't ride on top of the other in the window, and it certainly would not draw both - hence the summation of the amplitude of the frequencies.

Thanks,

J...

Got it.

Kind of like trying to "see" all of the frequencies of a guitar by simply watching a guitar string vibrate? (Which, of course, you can not see). Or, like standing in a room with a guitar AND a bass. You will hear both at once, but you will be hearing whatever constructive and destructive interference occurs at your ears (only hearing "one" waveform, but portraying 2 distinctly different waveforms)

Cucco is one cucco-ly-coolio dude :)

hey guys,

I read an article on phasing which stated that if you are constantly working in the audio field, production or whatever, you should check wiring specs on new leads and equipment you buy to make sure they all conform to the one standard. Curious to know: does anybody do this as common practice, or do most guys just assume that most manufacturers agree on what is considered "industry standard" and build according to this protocol?

Has anyone had some funny encounters??

I must confess, I have never checked any of the gear I have purchased, or leads for that matter to see if they all conform with one another, maybe purchasing "pro" products makes us think,

"hey, I don't have to check, they know what they are doing.."

Sammyg

emm Cucco (please dont flame me, im only 20 and still learning by asking silly questions)

its not possible to make a visual representation of everything that happens in the air and is caught by a microphone. of course not.

but one thing i dont get:

why would anyone want to have a perfect graphical representation of what happens in their DAW?

i mean to ask:

if you want to phase-align in your daw (cuz i think thats what started this thread) by shifting tracks so that they graphically align (at the lowest zoom-in-level)

wouldnt it be enough to just nudge (either by hand/eye or by delay plugins/tools) the beginning of each track/sound?

assuming that the instrument you're recording and the microphone are stable, (thus not moving back or forth from the source)

that would mean that all of the track would be in phase with the other.

also, assuming that frequency is in fact the same as amplitude (freq. is periods (thus amplitude) per second), doesnt the daw-interface in fact give us a representation of phase? i mean if you graphically align the amplitudes of two tracks, doesnt that mean you have the phase aligned as well?

am i missing something?

i guess essentially my question is: if i align a track to another that both have a lot of different frequencies, and i make sure that the beginning of each track starts graphically at exactly the same time, isnt it true that then they are in phase correctly with each other?

bwaagghh i guess this post wasnt as clear as i wanted it to be

sorry

Thomaster:

Hey man, good question. Don't worry, I won't flame you. Do I have that bad of a reputation already?

What you're asking about is essentially what I tried to dispel in the original post. Remember, the waveform displayed is one of amplitude and is an attempt to "combine" all frequencies into one waveform. As Randyman suggests, you wouldn't be able to see all the frequencies by the pluck of a guitar string, but you do get an overall idea of the sound by how much it vibrates.

That being said, since the wave represents amplitude and not frequency (despite a lot of people's desire to hold on to this misconception), peaks and troughs aligning does not an in-phase signal make.

Truthfully, if you use some basic math and your ears, rather than depending on a rather sloppy visualisation, you will find that signals in general are easy to phase align.

J...

why are you attempting to combine the frequencies in one waveform?? they are already in one waveform.

i hope you know this already. but this wave:

plus this wave:

becomes a combination of both.

example:

Gnarr,

You are absolutely correct. However, try doing that with thousands of frequencies - it doesn't look so pretty now. That's where the limitation of the DAW is. It tries its hardest, but falls short. Yes, it attempts to draw frequencies, but the fact is, not all frequencies are represented.

Thanks,

J...

haven't ANY of you guys heard of a fourier transform?

Thanks Recorderman.

The theory is that the digital sampling process takes a snapshot of the sound pressure wave (as picked up by the mic, for instance) 44,100 times per second, i.e. one sample every 1/44,100 of a second. If you have a DAW with an actual sample-level visual display and you can zoom in close, it becomes more obvious that you are seeing a visual model of exactly this data. With Samplitude for the PC you can zoom in to make 4 samples fill a 17 inch monitor.

You'd have to be a seriously autistic freak to be able to make sense of these waves but the theory holds that all the information is there. All the tiny detail of the noise floor waveform is there superimposed on the larger wave of the instrument you just recorded but of course it's impossible to tell it's there just by looking at it. But it is there.

Look here for how the average DAW fish fin wave display breaks down into its component frequencies (of which there are many quiet ones and a few dominant ones):

http://www.bores.com/courses/intro/freq/3_spect.htm

The maths for this is mind boggling.

If you look at a painting of a tree you see a tree. Look closer you can see the shape of the leaves. Analyze it with special gear and you could probably work out what the weather was like last winter and a few thousand other things despite the fact that to the eye it still just looks like a tree. The same holds true of these random looking waves. The devil is in the details.

thats true

but still cucco is right (after i read his post i agree:))

A Fast Fourier Transform (FFT) display, on the other hand, shows a lot more information, like the amplitude, length and frequency of all the different partials (overtones and fundamental). It is something that all DAWs should have in their GUIs as an option.

it holds way more info than the standard displays in Logic, Pro Tools, etc.
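As a rough illustration of what a Fourier view pulls out of a summed waveform, here is a minimal sketch using a naive discrete Fourier transform in plain Python (not an optimized FFT, and not how any particular DAW does it). The 50 Hz and 120 Hz test tones, their levels, and the low 800 Hz sample rate are all hypothetical values picked to keep the loop small.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Naive discrete Fourier transform: one magnitude per frequency bin below Nyquist."""
    n = len(samples)
    mags = []
    for k in range(n // 2):
        acc = sum(samples[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n))
        mags.append(abs(acc) * 2 / n)  # scaled so a full-level sine reads about 1.0
    return mags

RATE = 800  # hypothetical low sample rate, just to keep the example quick
N = 800     # exactly one second of samples, so bin k corresponds to k Hz

# One summed waveform made of a strong 50 Hz tone and a quieter 120 Hz tone.
wave = [1.0 * math.sin(2 * math.pi * 50 * i / RATE)
        + 0.25 * math.sin(2 * math.pi * 120 * i / RATE)
        for i in range(N)]

mags = dft_magnitudes(wave)
strongest = sorted(range(len(mags)), key=lambda k: mags[k], reverse=True)[:2]
for k in strongest:
    print(f"{k} Hz at level {mags[k]:.2f}")
```

The input is one "combined" wave, yet both component frequencies and their levels come back out, which is the kind of information the amplitude display holds but does not show.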

that's not what I was alluding to.. but this is ALL crazy. Just use your ears.. you have MORE than enough info to make records, just do it.

you're TOTALLY right.

:oops:

but its nice to test my knowledge here. although it makes me look like a snob-wannabe :-?

as far as i can remember, the original sound designer program from digi had that option where you could display a portion of the sound file you were working on in fft as a 3d visualization. i found this pretty useless, though. i think there are better concepts now, including that one software that visualizes it using 2d diagrams in color. makes more sense to me this way...

yeah, the latter was the one i was referring to.

its pretty handy to have that in a DAW.

it saves a lot of time for me

From the original post:

Remember - 1 foot = .88 milliseconds or 1 foot = .02 samples in a 44.1khz sampled track.

I think you mean that 1 ft = about 50 samples. There's a "one-over" error in there somewhere!

Mike

Thanks Mike. You're absolutely right.

Of course, how much phase shift a given delay produces also depends on the frequency.
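For anyone who wants to check the arithmetic, here is a small Python sketch. The speed of sound used (1130 ft/s) is an assumption, not a figure from the thread, and it changes with temperature. With that value, one foot comes out at about 0.88 ms, which at 44.1 kHz is roughly 39 samples rather than .02 or 50. The last function illustrates the frequency point: the same one-foot delay is a different fraction of a cycle at each frequency.

```python
SPEED_OF_SOUND_FT_PER_S = 1130.0  # assumed value for air at roughly room temperature
SAMPLE_RATE = 44100               # samples per second

def delay_seconds(distance_ft):
    """Time sound takes to travel distance_ft."""
    return distance_ft / SPEED_OF_SOUND_FT_PER_S

def delay_samples(distance_ft, sample_rate=SAMPLE_RATE):
    """The same delay expressed in samples at the given sample rate."""
    return delay_seconds(distance_ft) * sample_rate

def phase_shift_degrees(distance_ft, freq_hz):
    """Phase offset (0 to 360 degrees) that the delay causes at one frequency."""
    return (360.0 * freq_hz * delay_seconds(distance_ft)) % 360.0

ms_per_foot = delay_seconds(1.0) * 1000
samples_per_foot = delay_samples(1.0)
print(f"1 ft = {ms_per_foot:.3f} ms = {samples_per_foot:.1f} samples")
for freq in (100, 565, 1130):
    print(f"{freq} Hz: {phase_shift_degrees(1.0, freq):.1f} degrees")
```

Under the assumed speed of sound, a one-foot difference shifts 100 Hz by about 32 degrees but puts 565 Hz a full half cycle (180 degrees) out.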

Not to change the direction of the subject, but I'm still a bit confused. So is it wise or not to time align tracks in the DAW? I usually zoom in on my drum tracks and align the overheads and rooms with the kick and snare. It seems to tighten up the bottom end. Also, I use a sub mic (the speaker mic) and a D112. When I zoom in, the D112, being closer to the beater, is recorded sooner than the sub, so I time align them. Again, it tightens up the lows. I always go through and toggle all the phase buttons on all the drum tracks in the end and just go with what sounds best. Any direction would be appreciated.

The question posed by J-3 above is what I've been thinking as I read this very informative thread. Why does the sound tighten up when I align 2 kick drum mics then? Is it because the relatively simple kick drum info is easier to "see" and represented more clearly by the software? ...as opposed to the orchestral example that started this thread?
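One way to measure the offset between two mics instead of judging it by eye is cross-correlation. Nobody in this thread has described using it, so treat the following as a sketch of a swapped-in technique, not anyone's stated method. The decaying "thump", the 39-sample delay, the 0.6 level, and the function name are all made-up test values. It only estimates the time offset between two tracks; whether nudging by that amount sounds better is still a job for your ears.

```python
import math

def best_lag(reference, delayed, max_lag):
    """Lag in samples (0..max_lag) at which `delayed` lines up best with `reference`."""
    span = len(reference) - max_lag  # keeps reference[i] and delayed[i + lag] in range

    def score(lag):
        return sum(reference[i] * delayed[i + lag] for i in range(span))

    return max(range(max_lag + 1), key=score)

# Hypothetical close-mic signal: a decaying low thump, 400 samples long.
close_mic = [math.exp(-i / 40) * math.sin(2 * math.pi * i / 50) for i in range(400)]

# Hypothetical far mic: the same thump, quieter and arriving 39 samples later.
TRUE_DELAY = 39
far_mic = ([0.0] * TRUE_DELAY + [0.6 * s for s in close_mic])[:len(close_mic)]

offset = best_lag(close_mic, far_mic, max_lag=100)
print(f"estimated offset: {offset} samples")
```

On a clean, simple source like this the estimate matches the delay that was put in. On dense material with many sources at different distances there is no single offset to find, which is the situation the original post was warning about.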
