Been experimenting with using two mics on my acoustic: an SM57 (dynamic) and a single RODE M5 (a condenser). I generally blend these, with the 57 doing most of the work but the RODE still contributing.

The 57 is generally up pretty close, anywhere from 8 to 12 inches, and the RODE is about twice that distance back. As I play around with this, is there a way to check for phase issues in the DAW? Up to now I've just been moving them around until I find something that sounds good, playing with the blend, then toggling the track to mono to see how it sounds there. I'm not entirely sure how obvious phase issues will be. How paranoid about phase should I be?

Also, is phase more of a concern when using multiples of the same mic in a close-mic scenario? Like, am I generally gonna be okay with any random forward/backward placement here, or are all the checks I'm doing necessary?

Many thanks

Comments

The 3:1 rule really only applies when you are considering the bleed between different sources or different sections of a wide source covered by several mics. The video example of miking a choir is a good illustration. The 3:1 rule works by ensuring that the sound covered by one mic arrives at every other mic at a sufficiently lower amplitude that phase problems, although inevitably present, are reduced to an acceptable level.
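As a rough sketch of why the 3:1 ratio helps: if you assume an idealised point source in a free field (no room reflections) and identical mics and gains, level falls off with the inverse of distance. The function name and these assumptions are illustrative only and not from the thread.

```python
import math

def level_drop_db(near_distance, far_distance):
    """Level difference in dB between a near and a far mic.

    Assumes the free-field inverse-distance law (point source, no
    reflections), which real rooms only approximate.
    """
    return 20.0 * math.log10(far_distance / near_distance)

# A mic three times further from a source than that source's own mic
# hears it roughly 9.5 dB quieter under these assumptions.
print(round(level_drop_db(1.0, 3.0), 1))
```

Only the ratio of the two distances enters the calculation, so the units (inches, feet, metres) do not matter as long as both distances use the same one.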

When you put two or more microphones on a single source such as a guitar cabinet, the 3:1 rule does not usually apply, unless the further mic is a "room" mic to capture room reflections, and is present only at a relatively lower level in the mix. Close-miking or close/medium miking a guitar cabinet specifically with two different mics whose outputs may be of similar level in the mix is normally done to create a certain sound that incorporates the phasing effects between the microphones. If you like the sound you are getting, keep it, and forget the rules and the theory.

In your case, you are using a pair of microphones that are both pressure-sensitive, so the electrical output of the two has the same phase when presented with the same acoustic waveform. However, just for completeness, there is an added complication when using a velocity microphone such as a fig-8 ribbon in conjunction with a pressure-sensitive microphone, as the output from a velocity-sensitive microphone is automatically 90 degrees out of phase with that from the pressure-sensitive microphone. This phase difference cannot be corrected by simple polarity reversal, and so either has to undergo special phase-shifting processing or is left to become part of the mix sound. It's also where several people, including some top professionals, trip up when electing to add a fig-8 ribbon to a cardioid microphone to form an M-S array. The result of this can never decode correctly to L-R, but will produce a sound that is different from an X-Y array and may be judged acceptable on the particular sound source.

In addition to what Bos has so eloquently (and as usual) explained: to answer your original question, when working with multiple mics on multiple sources together or in close proximity to each other, you can always use the tried and true method of monitoring the mix, or the multiple-mic'd sources in question, in mono. If after switching to mono you hear something dramatically attenuate, drop out, or even disappear, then you've got a phase issue.

FWIW

Sorry for the interruption.. and now back to our regularly scheduled program. :)

Boswell makes an excellent point, that I don't think gets talked about enough. I see lots of posts from people paranoid about phase issues, but not much discussion about why you would put two different microphones on the same source at two different distances. It's often done to create 'size' and give the sound some depth-of-field, to use a camera analogy. The subtle, or not so subtle, differences in phase provide information to your ears -> brain about the space the sound lives in and how dominant the sound is within that space. In the real world our ears are positioned to help us triangulate the location of the sound. The phasing that occurs naturally in reflected sound in our environment helps us innately determine walls, boundaries, distances. Where there is distance, there is delay and some amount of acoustical phasing. So sometimes it's appropriate and necessary to re-align things, but sometimes not being perfectly aligned is what creates the desired sound and gives the recorded source some natural size and character.

In conjunction with that, there's also the issue of "Signal to Noise", which a lot of us automatically associate with a spec-sheet number that expresses the inherent noise within an audio device's combined circuitry. In acoustic space the same principle applies. Even on a live stage recording, an SM57 jammed up against the grill of a loud Marshall cabinet is going to 'hear' very little else while the guitarist is playing. There will be some minimal bleed or outside noise, but it's a very small ratio of bleed compared to the Marshall's overwhelming signal. In a studio you might have another mic 10-20 ft. away from the cab, which you're inviting to hear more of the room. You're putting the mic there presumably because you've found a sweet spot with regard to the balance between the amp and room. Even if the 2nd mic was also an SM57 it will give you a sense of the room. Swap that out for a more sensitive condenser mic and now you're picking up even more details about the room, which may be a good thing, or not. It certainly compounds the number of variables.

Now you've got the close-mic track on your DAW and a room-mic track on your DAW. If you slide the room-mic track earlier by the milliseconds needed to offset its distance from the close mic, you've created something that may sound great (or not). The combined and re-aligned guitar might get 'thicker', but lose 'bigness', and if taken too far it can sound fake because it's a sound that can't exist in nature. You just can't have it both ways in nature, but in recording-world it's, "screw you nature!! I've got Monster Guitars to mix". And of course there are times it just isn't prudent to stick your U87 inside the port of a kick drum, but you don't want to introduce natural delay into the tracks, so put your U87 where it sounds great (a safe distance away) and put a purpose-built kick drum mic in the port and nudge those tracks into alignment. (And then maybe drop the kick drum mic out of the mix completely.)
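For anyone wondering how big that nudge is, here is a minimal sketch. It assumes the speed-of-sound figure of roughly 13,512 inches per second that is quoted in this thread (it varies with temperature), and a 48 kHz session; the function name and defaults are illustrative, not anything a DAW provides.

```python
SPEED_OF_SOUND_IN_PER_S = 13512.0  # assumption: rough figure, varies with temperature

def alignment_offset(extra_distance_in, sample_rate_hz=48000):
    """Return (milliseconds, whole samples) by which the far mic lags the close mic.

    extra_distance_in is how much further the far mic is from the source
    than the close mic, in inches.
    """
    delay_s = extra_distance_in / SPEED_OF_SOUND_IN_PER_S
    return delay_s * 1000.0, round(delay_s * sample_rate_hz)

# Room mic 10 ft (120 in) further back than the close mic.
ms, samples = alignment_offset(120.0)
print(f"{ms:.2f} ms, {samples} samples at 48 kHz")
```

This only gives a starting point. In practice people usually line up a transient by eye or by ear, because the effective acoustic distance rarely matches a tape measure.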

So the key bullet-points are: Use your ears. The only rule is, how does it sound? If it SOUNDS right, it IS right. Experimentation takes time, but it's the only way to improve. Use your ears. What worked last time may be a good place to start, but may not sound as good this time due to any number of variables. Oh yeah and, use your ears.

Happy Recording!

It's another classic case of following the rules blindly rather than trusting your ears. Rather like when people double-mic snare drums. The book says you must reverse the polarity of one, but when I have forgotten, and then shoved two faders up, sometimes it sounds really good with both the right way around. Phase isn't like the sine waves we all use to explain it, where the inverted one cancels out completely. The more distant one might have more harmonics and they interact.

Two mics on an acoustic will sound different: the murky, boomy one, and the finger-swooshing one on the neck. Mix those together and you can change the whole sound. You'll have some cancellation and some combining. You might get comb filtering, which you might even like. Do it, then listen, and if you hate it, sort it! If you like it, keep it. I only get real problems when I point two mics at the same source from the same distance and have a reversed cable.

One thing to remember.....out of phase does not always = bad. You can occasionally obtain a very nice recording with a mic/s out of phase. And, simply monitoring the input can tell you a lot. Move the mic and/or instrument around the field with the mic in and out of phase. It's pretty amazing how much difference 1/2" can make, in or out of phase. That is all.

One thing that has not been mentioned: if two mics at different distances are panned apart (as the OP indicates), the tone will change when the mix is played in mono. So the problem is more complicated than a simple matter of the combined tone; it's a possible change in tone under different playback circumstances. I would probably address this by time-aligning the two tracks, moving the far mic's track to the left on the timeline.

That illustration in the video is wrong. The distance between the mics is not meaningful in the context of the 3:1 rule. It's all about the ratio of the distances between mics and sources. The x3 distance should be between the subject and mic 2.

When dealing with phase it's the absolute time or distance difference that matters, and the ratio of that difference to the same property of the wave (time or distance between peaks). The ratio of the two mic distances is irrelevant.

I'm totally new to phase concerns during a recording process, so forgive me if this is a dumb q...

Leave the video example for the moment,...multiple sources, multiple mics, I can see where phase problems may occur.

But if you have a single source like a guitar, a mic at 10" and a mic at 30", do phasing problems often occur?

I have no idea, I'm genuinely asking. Depending on the gain structure (assuming both channel strips are the same) wouldn't it be almost imperceptible? Sound travels roughly 13,512" per second...is it common for a 20"-30" difference to introduce phase issues into the recording with a single source?

Brother Junk, post: 441923, member: 49944 wrote: I'm totally new to phase concerns during a recording process, so forgive me if this is a dumb q...

Leave the video example for the moment,...multiple sources, multiple mics, I can see where phase problems may occur.

But if you have a single source like a guitar, a mic at 10" and a mic at 30", do phasing problems often occur?

I have no idea, I'm genuinely asking. Depending on the gain structure (assuming both channel strips are the same) wouldn't it be almost imperceptible? Sound travels roughly 13,512" per second...is it common for a 20"-30" difference to introduce phase issues into the recording with a single source?

We had a thread about this earlier this year.

If I'm doing the math right (no guarantees on that), a 20" difference will put the first cancellation at about 338 Hz, with further notches at odd multiples of that. How deep they are would depend on relative levels in the mix. Ultimately it's your ears that have to be the determining factor, but I would probably end up nudging the far mic track into alignment with the close mic.
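The arithmetic behind that 338 Hz figure can be sketched as follows. It assumes the 13,512 inches-per-second figure used earlier in the thread and treats the two mics as hearing the same signal apart from delay and level, which is a simplification; the function names are made up for illustration.

```python
import math

SPEED_OF_SOUND_IN_PER_S = 13512.0  # assumption: rough figure quoted in this thread

def null_frequencies(path_difference_in, max_hz=20000.0):
    """Comb-filter notch frequencies for two mics summed at the same polarity.

    Cancellation happens where the delay equals an odd number of
    half-periods: f = (2k + 1) / (2 * delay).
    """
    delay_s = path_difference_in / SPEED_OF_SOUND_IN_PER_S
    nulls = []
    k = 0
    while True:
        freq = (2 * k + 1) / (2.0 * delay_s)
        if freq > max_hz:
            break
        nulls.append(freq)
        k += 1
    return nulls

def null_depth_db(far_gain):
    """Depth of each notch relative to the close mic alone.

    far_gain is the far mic's linear level relative to the close mic
    and must satisfy 0 <= far_gain < 1.
    """
    if not 0.0 <= far_gain < 1.0:
        raise ValueError("far_gain must be in [0, 1)")
    return 20.0 * math.log10(1.0 - far_gain)

print([round(f, 1) for f in null_frequencies(20.0)[:3]])
print(round(null_depth_db(0.5), 1))
```

With the far mic 6 dB down (a linear gain of 0.5) the notches are only about 6 dB deep, which is why the blend matters as much as the distance.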

MOVE THE FREAKIN' MIC for crise sake!

sliding tracks is not the same thing as placing your mics so they are in phase. you need to move the mics into the correct place. otherwise you are just fixing a problem you shouldn't have in the first place. this is one of the things i dislike about DAW recording. too many ways to fix things that shouldn't happen in the first place.

"Oh don't worry about that! I'll just fix it in the mix." ...... yuch!

it's simple. put both mics up in mono. flip the phase of one of the mics. does it sound fatter or thinner? thinner is out of phase. also check in mono to be sure the two mics are not canceling each other out. it happens.

pre amp doesn't have a phase button? then you need to get an xlr adapter (or a better pre amp). the good pres all have phase flip buttons. it's a necessary tool.
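To get a feel for what the flip test is listening for, here is a simplified model of the mono sum at individual frequencies. It assumes both mics capture an identical signal apart from delay and level (real mics at different positions do not), and it reuses the 13,512 inches-per-second figure from this thread; `mono_sum_db` is a hypothetical helper, not a feature of any preamp or DAW.

```python
import cmath
import math

SPEED_OF_SOUND_IN_PER_S = 13512.0  # assumption: rough figure quoted in this thread

def mono_sum_db(freq_hz, path_difference_in, far_gain=1.0, invert_far=False):
    """Level of close + far mic summed to mono, in dB relative to the close mic alone."""
    delay_s = path_difference_in / SPEED_OF_SOUND_IN_PER_S
    polarity = -1.0 if invert_far else 1.0
    far = polarity * far_gain * cmath.exp(-2j * math.pi * freq_hz * delay_s)
    magnitude = max(abs(1.0 + far), 1e-12)  # floor avoids log10(0) at a perfect null
    return 20.0 * math.log10(magnitude)

# Compare both polarity settings at a low, a mid and a higher frequency
# for a 20 inch path difference.
for freq in (110, 338, 1000):
    normal = mono_sum_db(freq, 20.0)
    flipped = mono_sum_db(freq, 20.0, invert_far=True)
    print(f"{freq} Hz: normal {normal:+.1f} dB, flipped {flipped:+.1f} dB")
```

In this model the setting that keeps more low end is the one that tends to sound "fatter", but the other setting wins at other frequencies, so the ear test is still the deciding one.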

Totally agree. I haven't ever, in my entire and quite long life, done any MATHS! Cancellation can happen when both mics are equidistant, but this is an established technique. If the mics are at different distances from the same source then there will be time delay too, so the effect is different. The two mics will be capturing different areas of the sound source that have different timbres. Two mics can work nicely in stereo, creating some artificial width.

Your ears will tell you if you've set them up in the cancellation position because it will sound naff. If it sounds bad, move them, if it sounds good use them! Forget maths and calculations, the theory doesn't take real world practicalities into account.

Kurt Foster, post: 441926, member: 7836 wrote: MOVE THE FREAKIN' MIC for crise sake!

sliding tracks is not the same thing as placing your mics so they are in phase. you need to move the mics into the correct place. otherwise you are just fixing a problem you shouldn't have in the first place. this is one of the things i dislike about DAW recording. too many ways to fix things that shouldn't happen in the first place.

"Oh don't worry about that! I'll just fix it in the mix." ...... yuch!

it's simple. put both mics up in mono. flip the phase of one of the mics. does it sound fatter or thinner? thinner is out of phase. also check in mono to be sure the two mics are not canceling each other out. it happens.

pre amp doesn't have a phase button? then you need to get an xlr adapter (or a better pre amp). the good pres all have phase flip buttons. it's a necessary tool.

In/out of phase at what frequency? The only way to get them truly in phase is to use two identical mics at identical distances, which is pointless. The whole point is to have some kind of difference between the mics. It's a matter of what kind of difference you want, what sounds good. Placing them at different distances means they pick up the room and source differently. Maybe that's the good part and the arrival time difference is the bad part. Maybe the cancellation you get from the different arrival times is the good part. They are two different and valid approaches. Time alignment is not "fixing it in the mix", it's a useful technique made possible by digital mixing.

Inverting polarity is a treatment, not a cure. Do you understand the difference between phase and polarity? They are closely related but not the same.

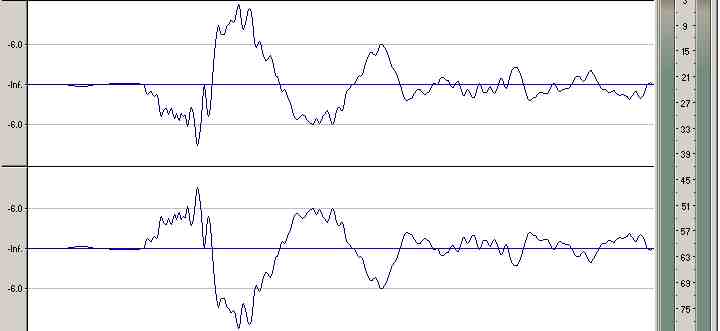

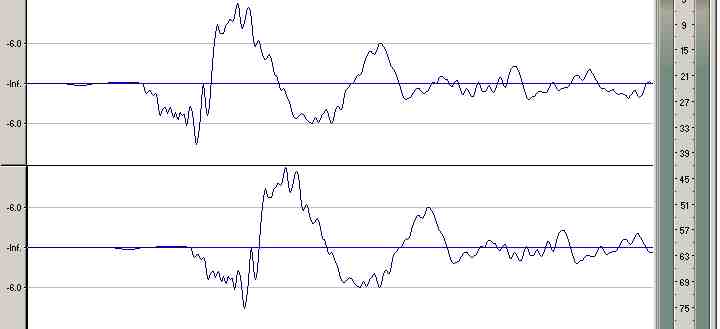

Phase is the result of two identical waves that are offset in time. This can be simple delay that is the same for all frequencies or vary with frequency due to things like filters. How much phase offset there is in degrees for a given amount of delay depends on the frequency in question. For audio signals polarity has only two states, in or out, with no intermediate condition. Phase occurs in the time dimension while polarity occurs in the amplitude dimension. Here's a pair of simple illustrations, polarity first, delay (that causes phase differences) below.
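The distinction can also be put in numbers. This is a minimal sketch of the relationship described above: a fixed delay produces a phase offset that grows with frequency, whereas a polarity flip amounts to 180 degrees at every frequency. The function name is made up for illustration.

```python
def phase_offset_degrees(freq_hz, delay_s):
    """Phase offset (0 to 360 degrees) that a pure delay causes at one frequency."""
    return (360.0 * freq_hz * delay_s) % 360.0

# The same 1 ms delay gives a different phase offset at each frequency.
for freq in (125, 250, 500):
    print(f"{freq} Hz: {phase_offset_degrees(freq, 0.001):.1f} degrees")
```

That is why a polarity switch can only fully undo a delay-induced cancellation at the particular frequencies where the delay happens to equal half a cycle.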

http://www.mixonline.com/news/profiles/armin-steiner/372804

Armin Steiner. He developed a phase correction network for each channel of his recorder. Here's an article from Mix Online. It's a good read.

Correct me if I'm wrong, but there is no such thing as perfect phase unless you duplicate a track in your DAW.

Many people get worked up about phase when the best thing to do is to listen and move mics around until it sounds good. In fact, it's better than calculating the positions, because you can hit a spot where it sounds better that you'd never have tried otherwise.

Phase displacement can be our friend too. To me it's the first EQ in the signal path...

One aspect people forget is that even with tracks recorded at different points in time, you can have phase issues. The best example is when stacking guitar tracks.

I like having 2-3 tracks to work which I'll pan L C R, but more than that creates a mess quickly.

Boswell, post: 441924, member: 29034 wrote: We had a thread about this earlier this year.

The link brings me back here lol. I'm not sure if that was a joke or a mistake. Either way, I appreciate it lol. But I will search for it. I'm aware of the 3/1 rule, but I've never recorded with more than one mic.

pcrecord, post: 441958, member: 46460 wrote: Correct me if I'm wrong, there is no such thing as perfect phase unless you duplicate a track in your daw..

I'm pretty sure you are correct. If we wanted to get technical, it's not a benchmark or standard, it's a property. So, "perfect" isn't applicable, unless you are talking about the phase relationship of one track to another, which would never be identical, unless you duplicate a track like you suggested. So, correct me if I'm wrong, but I'm pretty sure what you said, and what I just typed are correct.

You guys have a different understanding of it than me. I have never even thought about it in the recording phase (pun intended). But fwiw, pcrecord...unless the song is just one single instrument, recorded with a single mic, it seems that "perfect" isn't theoretically possible. It's just that the differences may be imperceptible or a non factor.

Also, once you have more than one track or mic, phase becomes relative, no? Once you exceed one instrument, one mic, you have more than one phase orientation, however slight. It may not be a problem, but it's probably there. And someone please correct me if I'm wrong.

If you listen to Fortune Empress of the World on a quality setup, we'll say tweeters, mids, bass driver, and sub. If you are sitting in perfect center, and I invert the phase of the tweeter or mid (not polarity, phase) on the right side, the background voices can gain separation and clarity. It can recess further into the sound stage. The rest of the song can seem mostly unaffected. If you do the same to the left side it will cause different perceived differences, maybe making the voices more muddy. I know that track has multiple voices, so maybe that is why it works this way on this track. All the above is dependent obviously on crossover points/slopes etc. Every 6 dB of slope = a 90° phase shift for the frequencies after the crossover point. So, the phase at differing Hz is also being altered (unless it's a 24db/lwr slope). But the HS8s and some others have made sure that's not a factor in the monitors. So perhaps what I'm talking about isn't even applicable. Because I'm talking about reversing a driver's phase, not a track. I'm talking about post recording, you are talking about pre recording. But my point is, and I'm pretty sure it's correct, that with multiple tracks, phase is no longer absolute (if it was ever absolute), it's relative, one track to another. The differences may be stark, or imperceptible.

I guess that's what I mean with my "sound travels app. 13,512" per second" question... Is a 20" difference really going to introduce any type of significant phase issue? Because almost everything is going to have slight phase differences. Even the fact that it's a different mic, could introduce imperceptible phase differences no? Some EQ plug-ins will introduce greater phase differences. So the phase difference will be there, but may not be a problem....

So for the op's q, I'm not suggesting that 20" can't introduce phase problems...I'm asking you guys who do this so much because I'm curious. Can phase issues be that stark with just a 20" difference? And I mean in practice, not theory. Bc in theory, they WILL be different.

Brother Junk, post: 441959, member: 49944 wrote: But fwiw, pcrecord...unless the song is just one single instrument, recorded with a single mic, it seems that "perfect" isn't theoretically possible. It's just that the differences may be imperceptible or a non factor.

That's exactly what I was saying ;)

We may add: unless the two or more instruments, recorded in different rooms or at different times, don't share the same frequency content. But that's going overboard, and it doesn't happen with music.

I respect those who calculate and can aim for the right spot the first time.. I DO !

I'm just too lazy for this. I prefer putting up the mics and listening to the result. The only exception would be when tracking drums: I try to have the overheads at the exact same distance from the snare and, if possible, the bass drum as well.

Dave Rat on YouTube has a great video where he sends pink noise to one side of the headphones, and the pink noise in the other side can be varied by quite tiny delays in time. It's very interesting how such tiny delays can be detected. Just a few ms makes a difference you really can hear, and with the pink noise, the shift in your head is much more obvious than plonking two mics on a guitar.

Learn how to properly set up your microphones using the 3 to 1 rule. Following this mic technique will ensure proper recording when using multiple microphones. See the diagrams in the videos for a clear image of exactly how the rule works.