How do you avoid comb filtering effects where there are singers or instruments with microphones spaced closely together? Often in situations with limited performance space, it is difficult to adhere to the 3:1 rule.

Comments

AUD10, post: 459336, member: 23483 wrote: How do you avoid comb filtering effects where there are singers or instruments with microphones spaced closely together? Often in situations with limited performance space, it is difficult to adhere to the 3:1 rule.

The comb filtering you refer to is due to sound from one source being picked up by a second microphone at a different distance from the source. The sound arrives later at the farther microphone, and the resulting time delay can create destructive interference in the mix. For there to be audible comb filtering, the level difference between the two signals has to be less than about 10dB, a figure that is the justification of the crude 3:1 distance rule, as the sound intensity falls off with the square of the distance.
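To put rough numbers on the delay described above, here is a minimal Python sketch. It assumes a speed of sound of 343 m/s (dry air near 20 °C), a single direct path to each microphone, and both signals summed at the same polarity. The function name `comb_notches` and the 0.343 m example distance are illustrative and not from the thread.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value for dry air near 20 °C


def comb_notches(path_difference_m, max_hz=20000.0, c=SPEED_OF_SOUND_M_S):
    """Return (delay in seconds, list of notch frequencies in Hz up to max_hz)."""
    if path_difference_m <= 0:
        raise ValueError("path difference must be positive")
    delay_s = path_difference_m / c
    notches = []
    m = 0
    # Cancellation happens where the delay equals an odd number of half periods.
    while (2 * m + 1) / (2 * delay_s) <= max_hz:
        notches.append((2 * m + 1) / (2 * delay_s))
        m += 1
    return delay_s, notches


delay_s, notches = comb_notches(0.343)  # second mic 0.343 m farther away
print(f"delay: {delay_s * 1000:.2f} ms")
print("first notches (Hz):", [round(f) for f in notches[:4]])
```

The notches are evenly spaced in Hz, which is what gives the response its comb-like shape. A shorter path difference pushes the first notch higher in frequency.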

It's usually not possible to avoid some bleed between microphones set up for different performers in the same performance space. The best way of dealing with it is not to avoid it at all costs but to keep it to an acceptable level through careful choice of microphone type, pickup pattern and positioning.

The wavelength of a sound wave is proportional to the reciprocal of its frequency. Ignoring dispersion and other effects, the velocity of sound in air is independent of frequency.

pcrecord, post: 459337, member: 46460 wrote: You see, frequencies move at different wavelengths in the air and therefore they travel at different speeds. A cancellation occurs when using 2 or more mics and the sound hits the mics at different times, which results in inverted polarity of a frequency.

The closer your mics are, the less cancellation should result.

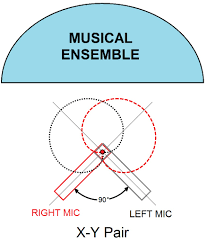

One way is to put the source at equal distance from the microphones. An X/Y technique is easy to do and gives great results (better done with 2 identical mics/preamps).

Comb filtering is an interesting effect. There are two types of comb filtering, feed forward and feed back. For those interested in the mathematics behind comb filtering, the z transform is handy for resolving both situations. The simple z-transform (discrete time) analysis models two sources combined to monaural (where one can hear the effect of phasing, etc.) using a difference equation y[n] = x[n] + a·x[n-k], where y[n] is the output (mono), x[n] is the untransformed input reference (i.e. mic 1), and a·x[n-k] is the second input (i.e. mic 2), in which k is the delay length in samples and a is a scaling factor of the delayed signal (i.e. two separated mics in A-B). The result of the z transform analysis shows maxima and minima; applied to audio, these maxima and minima are audible depending on conditions.

Those of us who remember the early stereo days recall that A-B recording invited comb filtering, the "hole in the center" effect, etc. The use of a center microphone, equally spaced between the left and right microphones and mixed into both channels, took care of the "hole in the center" effect. The x-y pair pictured above virtually eliminates the acoustic delay phasing between the microphones. The x-y pairing combines to mono quite well. This assumes both microphones are wired in phase.

The analysis could be applied to M-S recording; since the microphones are wired out of phase with different directional patterns, there is a phase factor of 180 degrees plus scaling that must be incorporated to create a correct difference equation.
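The feed forward difference equation above, y[n] = x[n] + a·x[n-k], can be tried out numerically. The sketch below is only an illustration under stated assumptions: the 48 kHz sample rate, the 48 sample (1 ms) delay and the -10 dB scaling factor are example values, and the function names are made up for this post. Taking the z transform gives H(z) = 1 + a·z^(-k), and the code evaluates its magnitude on the unit circle.

```python
import cmath
import math


def feedforward_comb(x, a, k):
    """Apply y[n] = x[n] + a * x[n - k]; samples before n = 0 count as zero."""
    return [x[n] + (a * x[n - k] if n >= k else 0.0) for n in range(len(x))]


def magnitude_db(a, k, freq_hz, sample_rate_hz):
    """Magnitude of H(z) = 1 + a * z**-k at z = exp(j*w), in dB."""
    w = 2.0 * math.pi * freq_hz / sample_rate_hz
    h = 1.0 + a * cmath.exp(-1j * w * k)
    return 20.0 * math.log10(max(abs(h), 1e-12))  # floor avoids log10(0) at a full null


a = 10 ** (-10 / 20)   # second mic 10 dB down (assumed example)
k = 48                 # 1 ms delay at 48 kHz (assumed example)
for f in (500, 1000, 1500, 2000):
    print(f, "Hz:", round(magnitude_db(a, k, f, 48000), 2), "dB")
```

With the delayed signal 10 dB down, the response only ripples a few dB up and down. With a = 1 (equal levels) the minima become complete nulls, which is why level difference matters as much as delay.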

rmburrow, post: 462203, member: 46233 wrote: Comb filtering is an interesting effect. There are two types of comb filtering, feed forward and feed back. For those interested in the mathematics behind comb filtering, the z transform is handy for resolving both situations. The simple z-transform (discrete time) analysis models two sources combined to monaural (where one can hear the effect of phasing, etc.) using a difference equation y[n] = x[n] + a·x[n-k], where y[n] is the output (mono), x[n] is the untransformed input reference (i.e. mic 1), and a·x[n-k] is the second input (i.e. mic 2), in which k is the delay length in samples and a is a scaling factor of the delayed signal (i.e. two separated mics in A-B). The result of the z transform analysis shows maxima and minima; applied to audio, these maxima and minima are audible depending on conditions.

Those of us who remember the early stereo days recall that A-B recording invited comb filtering, the "hole in the center" effect, etc. The use of a center microphone, equally spaced between the left and right microphones and mixed into both channels, took care of the "hole in the center" effect. The x-y pair pictured above virtually eliminates the acoustic delay phasing between the microphones. The x-y pairing combines to mono quite well. This assumes both microphones are wired in phase.

The analysis could be applied to M-S recording; since the microphones are wired out of phase with different directional patterns, there is a phase factor of 180 degrees plus scaling that must be incorporated to create a correct difference equation.

Great post, it's interesting how math relates to audio and acoustics so well. Wish I was better at relating the two. Thanks.

kmetal, post: 462206, member: 37533 wrote: Great post, its interesting how math relates to audio and acoustics so well. Wish i was better at relating the two. Thanks.

One of the most useful math/sound ideas is inverse square law. Double the distance, quarter the power. Halve the distance, quadruple the power. A 4:1 power ratio is 6dB. When I'm recording a group in the studio I'm always thinking of this, then adding in polar response and gobos.

bouldersound, post: 462207, member: 38959 wrote: One of the most useful math/sound ideas is inverse square law. Double the distance, quarter the power. Halve the distance quadruple the power. A 4:1 power ratio is 6dB. When I'm recording a group in the studio I'm always thinking of this, then adding in polar response and goboes.

Yeah, that's a good one, applying this stuff is something I would like to learn. The inverse square law reminds me of the mass law in studio building, where you double the mass to gain 6 (12?) dB, then you need to double again to get the next 6dB. So you end up with 2 sheets, then 4, then 8. I think it's logarithmic or exponential math stuff. It's crazy what the dB meter is telling you in math, and how universal this stuff is.

If there is a book like "math for audiots" or something that shows you how to apply the math to things, it would be great. I've read at least a dozen acoustics books and they are either full of equations or contain none. It would be great to have a book say something like: use this method to estimate how many dB your bass trap will absorb. Or relating sonics to the math in gear. Like if you build a preamp or EQ, how the component values relate to the sound. Like if your pre sounds 3dB too much at 1kHz, change this component's value.

Sorry to rant or go off topic.

Inverse square law is pretty simple. Get the mic close to the thing you want to capture and far from anything you don't want to capture. It's the basis for the 3:1 rule of thumb, in that if the mic is three times as far from the unwanted things as the wanted things you'll get about 9dB of isolation, plus whatever you get from exploiting off axis rejection. The diagram I posted above illustrates that.
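The arithmetic behind that figure is short enough to check. This is a sketch that assumes an idealized point source in free field (no room reflections, omnidirectional mic), so real rooms will give less isolation than it predicts. The function name `level_difference_db` is just for illustration.

```python
import math


def level_difference_db(near_m, far_m):
    """Level drop in dB going from near_m to far_m from an ideal point source."""
    return 20.0 * math.log10(far_m / near_m)


print(round(level_difference_db(1.0, 2.0), 2))  # double the distance
print(round(level_difference_db(1.0, 3.0), 2))  # the 3:1 rule of thumb
```

Doubling the distance gives about 6 dB and tripling it gives about 9.5 dB, which is where the "about 9dB" for 3:1 comes from.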

In live sound, doubling watts (power) gets you +3dB. At low frequencies you can exploit boundary effect for increased output. A sub on the floor is already 3dB above a sub suspended away from any boundaries. Putting it against a wall gets you an additional 3dB, and putting it in a corner gets you yet another 3dB. The effect tapers off as you go up in frequency.

Any speaker trends toward omnidirectional at lower frequencies based on the ratio of the wavelength to the radiating area. Once the wavelength gets big enough (I forget if it's 2x or 4x), the speaker becomes omnidirectional. Because of this, multiway speaker systems are often described as having a "christmas tree" dispersion pattern, narrow at the top of each band and widening toward the bottom of the band, then transitioning to narrow again at the top of the next lower band.

Frequency based directionality also happens on the receiving end. The distance between the ears makes humans essentially unable to perceive the precise direction of frequencies below about 300Hz. That's why I high pass [Edit: the difference channel on] mixes when I have to pan LF elements.

A lot of audio math is geometry and proportion. Comb filtering is due to the proportion between the time offset and wave interval (which can also be expressed in distance when talking about actual sound waves).

That is great info !!

Although, we should not forget that phase cancellation isn't all evil. It can be a good tool to cancel unwanted frequencies and serve as your first EQ before hitting the preamp! ;)

Fab Dupont from puremix.net once said:

-"Place the mics so it sounds good"

-"Is it in phase ? Who cares if it sounds good!! "

bouldersound, post: 462214, member: 38959 wrote: That's why I high pass mixes when I have to pan LF elements.

Have you thought of making the low end more centered instead?

One example of a plugin that can do this:

bouldersound, post: 462207, member: 38959 wrote: One of the most useful math/sound ideas is inverse square law. Double the distance, quarter the power. Halve the distance quadruple the power. A 4:1 power ratio is 6dB. When I'm recording a group in the studio I'm always thinking of this, then adding in polar response and goboes.

The comb filtering problem can be modeled using regular trigonometry, but the equations get messy. The discrete time analysis (z-transform) allows for changing n (sample number) and a (a scaling factor incorporating amplitude, etc.) with much less clutter.

The velocity of sound at STP [standard temperature (25 deg C) and pressure (760 torr)] is approximately 340 meters/sec. (multiply this by 3.281 to get feet/sec.) The velocity of sound does NOT change with frequency. The absorption (loss) of sound DOES change with frequency for a given distance from the source. Remember the HF boost in the Neumann M50 mic which was used to record orchestras, etc. at a distance in a large auditorium??
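For anyone who wants to play with the numbers, here is a small Python sketch. It uses the commonly quoted linear approximation c ≈ 331.3 + 0.606·T (T in deg C) for dry air, which is an assumption brought in for illustration and is only good near room temperature. The function names are made up for this example.

```python
def speed_of_sound_m_s(temp_c):
    """Approximate speed of sound in dry air (linear fit, valid near room temperature)."""
    return 331.3 + 0.606 * temp_c


def wavelength_m(freq_hz, temp_c=20.0):
    """Wavelength = speed / frequency; the speed itself does not depend on frequency."""
    return speed_of_sound_m_s(temp_c) / freq_hz


for f in (100, 1000, 10000):
    print(f, "Hz ->", round(wavelength_m(f), 3), "m")
```

Only the wavelength changes with frequency here. The speed depends on temperature, not on the frequency being carried.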

Kurt Foster, post: 462225, member: 7836 wrote: phase was far more an issue with mono (AM radio) and vinyl. stereo and CD's changed that.

Phasing was an issue with AM radio for years when the record men brought stereo LP's and 45 rpm records cut in stereo to AM radio stations and the monaural releases were eliminated. Some of the techniques involved to obtain mono were good and some others (like wiring the cartridge L and R outputs in parallel at the cartridge) were "Rube Goldberg" at best. The easiest way to obtain satisfactory mono was to use a stereo preamp (so the cartridge was terminated properly) and combine the L & R outputs of the preamp using a resistive combiner and send the combined (mono) output to the console.

The old "rock and roll" days were interesting with the "more music" formats spinning the turntables at 47 rpm instead of 45 rpm, etc. and packing in more plays per hour. Yes, announcers actually spun records on the radio before tape cartridges (constant head alignment trouble) and CD's and other digital media took over. Stereo FM in the early days was also interesting. The early stereo generator/exciter units were vacuum tube and occupied approximately half of a 6 foot rack, and required constant attention. The new gear is much more stable; the exciter and audio processing occupies a few units of rack space.

Corporate consolidated ownership of large numbers of radio stations, combined with satellite programming (it's 20 min after the hour) has wrecked radio as some of us once knew it. Some listeners are smart; non-local origination (20 min after the hour announcements, etc.), automated programming, and getting a recording when a listener calls the radio station are tune outs. Like some of the garbage seen on television, the most outstanding feature of the radio or TV is the OFF switch...

pcrecord, post: 462220, member: 46460 wrote: Have you thought of making the lowend more centered instead ?

Sorry, what I meant to say was that I high pass the difference channel, which is what you're suggesting. When mixing at home I use a plugin that doesn't have quite so much visual distraction as that. It's freeware called Basslane. At my friend's studio I use Brainworx bx_digital V2.
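For the curious, high passing the difference channel can be sketched in a few lines of Python. This is not how Basslane or bx_digital V2 are implemented (that is not something this thread documents). It is a generic sum/difference version with a simple first-order filter, and the function names and the 100 Hz cutoff are assumptions for the example.

```python
import math


def one_pole_highpass(samples, cutoff_hz, sample_rate_hz):
    """First-order (6 dB/octave) high-pass, the discrete form of an RC filter."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate_hz
    alpha = rc / (rc + dt)
    out, prev_in, prev_out = [], 0.0, 0.0
    for x in samples:
        prev_out = alpha * (prev_out + x - prev_in)
        prev_in = x
        out.append(prev_out)
    return out


def highpass_difference_channel(left, right, cutoff_hz, sample_rate_hz):
    """Split into sum (mid) and difference (side), filter only the difference."""
    mid = [(l + r) / 2.0 for l, r in zip(left, right)]
    side = [(l - r) / 2.0 for l, r in zip(left, right)]
    side = one_pole_highpass(side, cutoff_hz, sample_rate_hz)
    new_left = [m + s for m, s in zip(mid, side)]
    new_right = [m - s for m, s in zip(mid, side)]
    return new_left, new_right


# Extreme case: a constant (0 Hz) signal panned hard left drifts to the center.
left, right = [1.0] * 4800, [0.0] * 4800
new_left, new_right = highpass_difference_channel(left, right, 100.0, 48000)
print(round(new_left[-1], 3), round(new_right[-1], 3))
```

Anything identical in both channels passes through untouched, because it lives entirely in the sum channel. Only the low frequency part of what differs between left and right gets pulled toward the center.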

rmburrow, post: 462227, member: 46233 wrote: Phasing was an issue with AM radio for years when the record men brought stereo LP's and 45 rpm records cut in stereo to AM radio stations and the monaural releases were eliminated. Some of the techniques involved to obtain mono were good and some others (like wiring the cartridge L and R outputs in parallel at the cartridge) were "Rube Goldberg" at best. The easiest way to obtain satisfactory mono was to use a stereo preamp (so the cartridge was terminated properly) and combine the L & R outputs of the preamp using a resistive combiner and send the combined (mono) output to the console.

The old "rock and roll" days were interesting with the "more music" formats spinning the turntables at 47 rpm instead of 45 rpm, etc. and packing in more plays per hour. Yes, announcers actually spun records on the radio before tape cartridges (constant head alignment trouble) and CD's and other digital media took over. Stereo FM in the early days was also interesting. The early stereo generator/exciter units were vacuum tube and occupied approximately half of a 6 foot rack, and required constant attention. The new gear is much more stable; the exciter and audio processing occupies a few units of rack space.

Corporate consolidated ownership of large numbers of radio stations, combined with satellite programming (it's 20 min after the hour) has wrecked radio as some of us once knew it. Some listeners are smart; non-local origination (20 min after the hour announcements, etc.), automated programming, and getting a recording when a listener calls the radio station are tune outs. Like some of the garbage seen on television, the most outstanding feature of the radio or TV is the OFF switch...

other problems; summing stereo with phase issues to mono can sometimes cancel out some elements of the mix. example: a guitar through a delay or chorus pedal that generates a "stereo" output. record that in stereo and then sum it to mono .... it will cancel out and you lose level or worst case it completely nulls out. this is why we used to ALWAYS listen to a mix in mono before printing it, to be sure the mix was still close in both mono and stereo. this was common practice even after stereo became the de facto standard in the 60's, lasting well into the late 80's to ensure compatibility in both TV and radio.

badly wired patch bays and incompatible pin out formats of the 70's / 80's also contributed to problems. with limited tracks it was standard practice that parts of a mix would be built in "packages" several guitars or vocal "stacks" of backing vocals summed to 1 or 2 tracks depending if it was 4 / 8 or 16 track. if you are bouncing tracks and every time you do a bounce the polarity flips and you don't know it you might be in for a surprise when you play that wonderful stereo mix you made in mono. "Hey! Wtf happened to the vocals?" hopefully it was before you pressed up a sh*tload of records! lol!
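The polarity-flip surprise described above is easy to demonstrate. A minimal Python sketch, assuming a 440 Hz test tone at 48 kHz and a simple (L+R)/2 mono sum; the names are illustrative only.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mono_sum(left, right):
    """Fold stereo to mono as (L + R) / 2."""
    return [(l + r) / 2.0 for l, r in zip(left, right)]


tone = [math.sin(2.0 * math.pi * 440.0 * n / 48000.0) for n in range(4800)]
flipped = [-s for s in tone]  # same part with its polarity inverted

print("in polarity :", round(rms(mono_sum(tone, tone)), 3))
print("one flipped :", round(rms(mono_sum(tone, flipped)), 3))
```

In stereo both versions have the same level in each speaker, which is why the problem can go unnoticed until somebody hits the mono button.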

bouldersound, post: 462214, member: 38959 wrote: Inverse square law is pretty simple. Get the mic close to the thing you want to capture and far from anything you don't want to capture. It's the basis for the 3:1 rule of thumb, in that if the mic is three times as far from the unwanted things as the wanted things you'll get about 9dB of isolation, plus whatever you get from exploiting off axis rejection. The diagram I posted above illustrates that.

In live sound, doubling watts (power) gets you +3dB. At low frequencies you can exploit boundary effect for increased output. A sub on the floor is already 3dB above a sub suspended away from any boundaries. Putting it against a wall gets you an additional 3dB, and putting it in a corner gets you yet another 3dB. The effect tapers off as you go up in frequency.

Any speaker trends toward omnidirectional at lower frequencies based on the ratio of the wavelength to the radiating area. Once the wavelength gets big enough (I forget if it's 2x or 4x), the speaker becomes omnidirectional. Because of this, multiway speaker systems are often described as having a "christmas tree" dispersion pattern, narrow at the top of each band and widening toward the bottom of the band, then transitioning to narrow again at the top of the next lower band.

Frequency based directionality also happens on the receiving end. The distance between the ears makes humans essentially unable to perceive the precise direction of frequencies below about 300Hz. That's why I high pass mixes when I have to pan LF elements.

A lot of audio math is geometry and proportion. Comb filtering is due to the proportion between the time offset and wave interval (which can also be expressed in distance when talking about actual sound waves).

Well said. Very informative.

Kurt Foster, post: 462225, member: 7836 wrote: phase was far more an issue with mono (AM radio) and vinyl. stereo and CD's changed that.

I've heard some FM receivers (like car radios) switch to mono when they reach a certain range from the broadcast station, in order to improve reception. I guess mono summing boosts the signal somehow?

bouldersound, post: 462228, member: 38959 wrote: At my friend's studio I use Brainworx bx_digital V2.

Just fyi since you mentioned that plugin specifically and we were discussing oversampling of plugins in the other thread. I came across a thread where Dirk from Bx said "some of our plugins use oversampling, the bx_digital v2 does not." The code is nearly identical on the native and dsp versions. Just fwiw.

Kurt Foster, post: 462229, member: 7836 wrote: other problems; summing stereo with phase issues to mono can sometime cancel out some elements of the mix. example a guitar through a delay or chorus pedal that generates a "stereo" output. record that in stereo and then sum it to mono .... it will cancel out and you lose level or worse case it completely nulls out. this is why we used to ALWAYS listen to a mix in mono before printing it, to be sure the mix was still close in both mono and stereo. this was common practice even after stereo became defacto standard in the 60's, lasting well into the late 80's to insure compatibility in both TV and radio.

badly wired patch bays and incompatible pin out formats of the 70's / 80's also contributed to problems. with limited tracks it was standard practice that parts of a mix would be built in "packages" several guitars or vocal "stacks" of backing vocals summed to 1 or 2 tracks depending if it was 4 / 8 or 16 track. if you are bouncing tracks and every time you do a bounce the polarity flips and you don't know it you might be in for a surprise when you play that wonderful stereo mix you made in mono. "Hey! Wtf happened to the vocals?" hopefully it was before you pressed up a sh*tload of records! lol!

Interesting stuff. Now stereo wideners and keyboards/vsti that are not true stereo are culprits of mono surprises.

It's amazing that mono remains as relevant as ever (to me at least), since so much is now listened to on phones, which aren't all stereo.

I have often had a wry smile when people talk about comb filtering as a general concept and it turns out to be rather too complicated to sink in at the first attempt, or the second or third in my head. The discussions always suggest workarounds and solutions that do work. Then I go to work and it all goes out of the window when you give actors omni-directional microphones and let them wander around a stage in a never ending series of slightly different distances.

If you ever get a chance to sit next to the person mixing the live audio in a theatre with many open mics, you'll see most have developed fast fingers. The ones that do it best have fingers that never stop. None of the studio stuff where you set faders and sit back. Take two people about to sing a duet, the simplest case, so it's two faders. As they get closer together and the comb filtering physics starts to emerge, the operators start to 'play the faders'. They are watching the microphones, listening, and predicting the to and fro of the song, bringing the two levels up and down in a kind of dance, sometimes even on syllables in the words. It's fascinating to watch, and I do know I am not good enough to do this.

At first, I assumed these people were just over-reacting to the music, like when you see DJs flicking faders and you kind of assume it has no effect. Until, that is, you have to deputise and you suddenly notice that characteristic 'hollow' sound creeping in, and you knock the male fader down a little and sort it. Then the song changes and he takes the lead and you have to swap the emphasis. Then they sneak even closer for the near kiss and the very quiet end, and you get the effect again, big time, so you favour the mic that is the most central, and the comb filtering drops back. These people who do this automatically, like the best helicopter pilots, really earn their money.

When you see them doing this in a big chorus number, the number of live mics rises drastically, yet they seem to be doing less as the performers are spaced further from each other. Then suddenly the choreography brings them closer and their fingers fly. One guy I work with uses automation to bring the critical clusters of faders to his VCA section so he only works with a few at a time, but steps through the scenes as the songs progress and voices are added or removed. He tells me he's become very good at spotting the comb filtering starting, in the same way we can detect feedback before it actually starts and sort it. I've listened, and while it's easy with two close actors speaking, it's impossible to my ears once the orchestra kicks in, but he can hear it.

Years ago, my first experience was with shotguns across a stage front, all those 'torch' type patterns firing upstage. Equidistant spacing, but the narrow pattern meaning more gain and more reach. I didn't even know the weird sound WAS comb filtering back then.

kmetal, post: 462233, member: 37533 wrote: Well said. Very informative.

I've heard some FM receivers (like car radios) switch to mono when they reach a certain range from the broadcast station, in order to improve reception. I guess mono summing boosts the signal somehow?

Just fyi since you mentioned that pluggin specifically and we were discussing oversampling of pluggins in the other thread. I came across a thread where Dirk from Bx said "some of our pluggins use oversampling, the bx_digital v2 does not." The code is nearly identical on the native and dsp versions. Just fwiw.

Interesting stuff. Now stereo wideners and keyboards/vsti that are not true stereo are culprits of mono surprises.

Its amazing that mono still remains relavant as ever (to me at least) since now much is listened to on phones which aren't all stereo.

In the USA, FM broadcasting began in mono, and mono compatible stereo broadcasting was introduced in the early 1960's. The FM stereo signal involves insertion of a 19 kHz "pilot" tone alongside the main (L+R) channel; the pilot frequency is doubled, and the difference (L-R) information is carried on the resulting 38 kHz suppressed subcarrier (like DSSC) for transmission. The 19 kHz stereo "pilot" occupies around 10% of the transmitted composite FM signal. On the receiver end, the difference information is demodulated from the received subcarrier, and a sum and difference matrix (L+R/L-R) restores left and right audio.

If the receiver is distant from the desired station, or the receiver cannot detect the 19 kHz stereo "pilot", the receiver will default to mono reception.

One more comment: Do not expect a satisfactory quality sound when audio loaded with phasing issues is transmitted using a sum and difference system. Ideally, perfectly balanced "mono" transmitted through a sum and difference system should sum to perfect balanced "mono" at the receiver. (L-R (difference) component -40 dB or better like -60 dB seen on the modulation monitor at the transmitter; the better (less L-R difference component), the better the separation when true stereo is transmitted.)
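To get a feel for what a -40 dB difference component implies, here is a rough Python sketch. It assumes the same mono signal feeds both channels with only a small gain mismatch between them (no phase or timing error), which is a simplification. The function name `difference_level_db` is hypothetical.

```python
import math


def difference_level_db(gain_error_db):
    """Level of (L - R) relative to (L + R) when R = L scaled by gain_error_db."""
    g = 10 ** (gain_error_db / 20.0)
    if g == 1.0:
        return float("-inf")  # perfectly matched channels: no difference component
    return 20.0 * math.log10(abs(1.0 - g) / (1.0 + g))


for err in (1.0, 0.5, 0.1, 0.01):
    print(f"{err} dB mismatch -> L-R at {difference_level_db(err):.1f} dB")
```

Under this assumption, a channel mismatch of about a tenth of a dB already puts the difference component in the mid -40s, so figures like -60 dB call for very tight matching through the whole chain.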

bouldersound, post: 462238, member: 38959 wrote: Very much like how the color information was squeezed into the originally monochrome TV signal.

Correct...the original analog NTSC video format was monochrome. Transmitted over the air, the signal was amplitude modulation (A5). The sound was inserted around 4.5 MHz from the lower range of the 6 MHz wide channel and was frequency modulated. When color was added, the color burst and other color material for that frame was inserted at 3.58 MHz from the bottom of the channel and was phase modulated. Black and white receivers ignored the color burst information.

The newer HDTV digital signal contains all the video, RGB, and audio in the digital word, and is NOT compatible with the older NTSC format. There may be converters out there to take a NTSC signal from older equipment and convert it digitally into a HDTV signal. TV stations had to spend a lot of money to make the required (in the USA) conversion to HDTV. Consumers spent a lot of money on new TV receivers. Cable set top boxes regenerated the NTSC signal from digital to allow subscribers with older TV receivers to use their equipment with the cable.

And in another analogy between stereo signals and video, I still make my videos meet NTSC broadcast legal color requirements. If I use full scale contrast I've found that the blacks and whites clip when I display it on my TV, even though the signal path is completely digital. It's a very similar situation to keeping audio mono compatible.

You see, frequencies move at different wavelengths in the air and therefore they travel at different speeds. A cancellation occurs when using 2 or more mics and the sound hits the mics at different times, which results in inverted polarity of a frequency.

The closer your mics are, the less cancellation should result.

One way is to put the source at equal distance from the microphones. An X/Y technique is easy to do and gives great results (better done with 2 identical mics/preamps).